The video highlights the work and ideas of Geoffrey Hinton, an eminent figure in Artificial Intelligence, focusing on Hopfield nets and Boltzmann machines. Hinton, taking on the challenge of explaining complex AI concepts to a general audience, elaborates on the intricate design of networks that can simulate human memories or interpretations by minimizing energy states. He introduces the notion of energy in Hopfield networks as a representation of memory storage and retrieval, and discusses how such networks find low-energy states that correspond to optimal solutions or memory retrieval, despite their complex configurations.

Hinton further explains the concept of Boltzmann machines, whose stochastic neurons make probabilistic decisions that let the network approach thermal equilibrium. He discusses potential applications of these networks in interpreting ambiguous visual data, such as inferring 3D interpretations from 2D line drawings. He addresses the problems of local minima and of how slow these machines are, describes how such networks learn, and covers practical applications such as the Netflix recommendation system and improved speech recognition at Google.

Key Vocabulary and Common Phrases:

1. emeritus [ɪˈmɛrətəs] - (adjective) - Retired but allowed to keep their title as an honor. - Synonyms: (retired, honorary, distinguished)

In 1987, he was appointed professor of Computer Science at the University of Toronto, Canada, where he is presently Professor Emeritus.

2. Hopfield net [ˈhɒpfiːld nɛt] - (noun) - A type of recurrent artificial neural network with symmetric connections and binary threshold neurons that serves as a content-addressable memory system. - Synonyms: (neural network, associative network, memory network)

So first I have to explain Hopfield nets, and I'm going to explain the version with binary neurons that have states of 1 or 0.

3. configuration [kənˌfɪgjʊˈreɪʃən] - (noun) - An arrangement of elements in a particular form, figure, or combination. - Synonyms: (arrangement, setup, formation)

The global state of a whole network is called a configuration, just so we seem a bit like physics.

4. stochastic [stəˈkæs.tɪk] - (adjective) - Randomly determined; having a random probability distribution or pattern that may be analyzed statistically but may not be predicted precisely. - Synonyms: (random, probabilistic, aleatory)

But the solution to that is to have noisy neurons, stochastic binary neurons. They still only have binary states.

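5. thermal equilibrium [ˈθɜrməl ˌiː.kwɪˈlɪb.ri.əm] - (noun) - In physics, a condition where all parts of a system are at the same temperature and no heat flows between them; in the lecture, the condition in which the probability distribution over a network's configurations has stopped changing, even though the network itself keeps changing state. - Synonyms: (temperature balance, energy balance, thermal balance)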

The system will eventually approach what's called thermal equilibrium.

6. Boltzmann distribution [ˈboʊlts.mən ˌdɪstrɪˈbjuːʃən] - (noun) - The probability distribution over the configurations of a system at thermal equilibrium, in which the probability of a configuration depends only on its energy and lower-energy configurations are more probable. - Synonyms: (Gibbs distribution, statistical distribution, energy distribution)

It settles to a particular distribution called the Boltzmann distribution.

7. probabilistic [ˌprɒbəˈbɪlɪstɪk] - (adjective) - Based on or affected by probability; involving chance or uncertainty. - Synonyms: (stochastic, random, chance-based)

They're always binary, but they make just these probabilistic decisions.

8. content-addressable memory [ˈkɒntɛnt əˈdrɛsəbl ˈmɛməri] - (noun) - A storage system that retrieves stored data based on its content rather than on the address where it is stored. - Synonyms: (associative memory, CAM, recallable storage)

So settling to energy minima when they represent memories is a way of having a content-addressable memory.

9. backpropagation [ˈbækˌprɒpəˌgeɪʃən] - (noun) - A method used in machine learning to calculate the gradient of the loss function with respect to the weights of a network by passing sensitivities backward through its layers. - Synonyms: (backprop, backward propagation of errors)

The reason that's surprising is because in an algorithm like backpropagation, which is what all the neural nets now actually use, you require a backward pass to convey information about the other weights.

10. learnability [lɜːrnəˈbɪlɪti] - (noun) - The capacity of being learned or the ease with which something can be learned. - Synonyms: (teachability, comprehensibility, understandability)

But if you're a chemist, you know that enzymes are useful things. And even though RBMs are no longer used, they allowed us to make the transition from thinking that deep neural networks would never work to seeing that deep neural networks actually could be made to work rather easily if you initialized them this way. Once you've made the transition, you don't need the enzyme anymore.

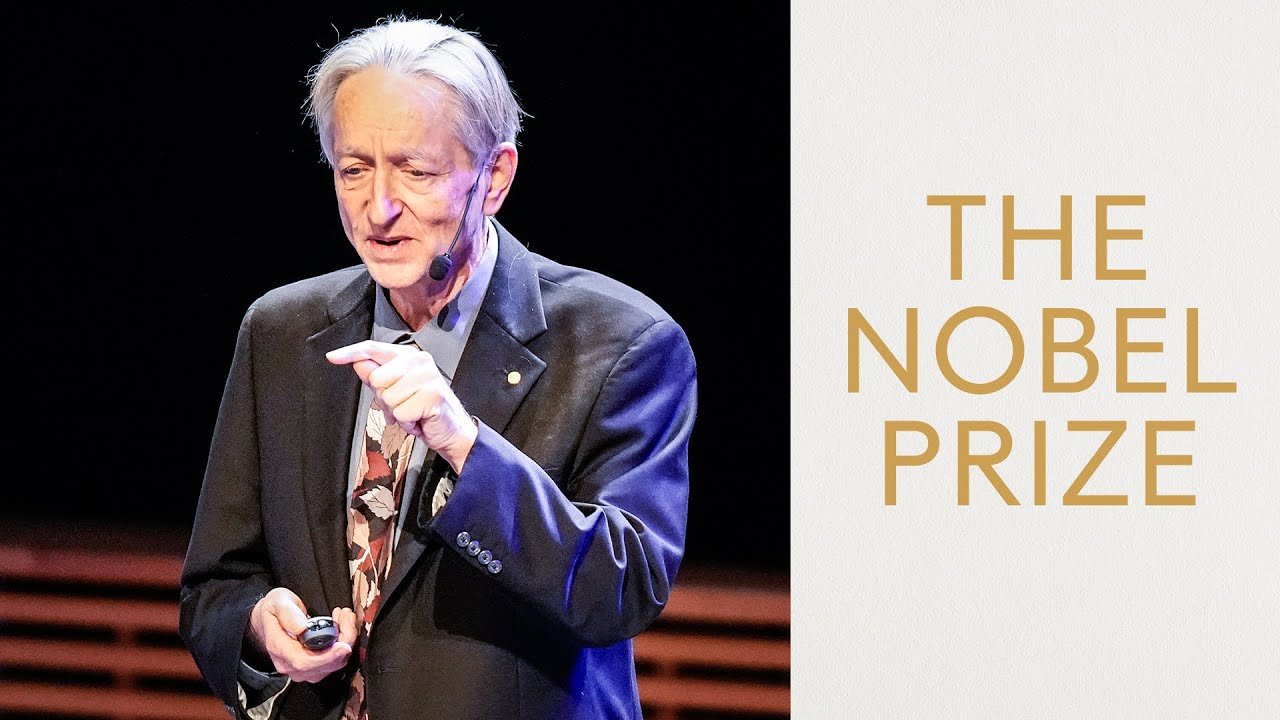

Nobel Prize lecture - Geoffrey Hinton, Nobel Prize in Physics

It is now my pleasure and great honor to introduce our second speaker, Geoffrey Hinton. Geoffrey Hinton was born in London, UK, in 1947. He received a bachelor's degree in experimental psychology from Cambridge University in 1970. In 1978, he was awarded a PhD in artificial intelligence from the University of Edinburgh. After postdoctoral research, he worked for five years as a faculty member in computer science at Carnegie Mellon University in Pittsburgh. In 1987, he was appointed professor of computer science at the University of Toronto, Canada, where he is presently Professor Emeritus. Between 2013 and 2023, he split his time between academic research and Google Brain. Please join me in welcoming Geoffrey Hinton to the stage to tell us about the developments that led to this year's Nobel Prize in Physics.

So today I'm going to do something very foolish. I'm going to try and describe a complicated technical idea for a general audience without using any equations. First I have to explain Hopfield nets, and I'm going to explain the version with binary neurons that have states of 1 or 0. On the right there you'll see a little Hopfield net. The most important thing is that the neurons have symmetrically weighted connections between them. The global state of a whole network is called a configuration, just so we seem a bit like physics. Each configuration has a goodness, and the goodness of a configuration is simply the sum, over all pairs of neurons that are both on, of the weights between them. So if you add up those weights in the red boxes, you get four. That's the goodness of that configuration of the network.

And the energy is just minus the goodness. These networks will settle to energy minima. The whole point of a Hopfield net is that each neuron can locally compute what it needs to do in order to reduce the energy, where energy is badness. If the total weighted input coming from other active neurons is positive, the neuron should turn on. If the total weighted input coming from other active neurons is negative, it should turn off. And if we keep picking neurons at random and applying that rule, we will eventually settle to an energy minimum.
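
Here is a minimal Python sketch of the goodness, energy, and local update rule just described. It is an illustration under assumptions, not code from the lecture: the 4-neuron weight matrix `W` is hypothetical (the slide's weights are not given in the text), and a neuron whose total input is exactly zero is assumed to keep its current state.

```python
import random

# Hypothetical symmetric weights for a 4-neuron Hopfield net (zero diagonal).
W = [[0, 3, -1, 2],
     [3, 0, 1, -2],
     [-1, 1, 0, 3],
     [2, -2, 3, 0]]

def goodness(w, state):
    """Sum, over all pairs of neurons that are both on, of the weight between them."""
    n = len(state)
    return sum(w[i][j] for i in range(n) for j in range(i + 1, n)
               if state[i] == 1 and state[j] == 1)

def energy(w, state):
    """Energy is just minus the goodness."""
    return -goodness(w, state)

def total_input(w, state, i):
    """Weighted input to neuron i from the other active neurons."""
    return sum(w[i][j] for j in range(len(state)) if j != i and state[j] == 1)

def settle(w, state, rng):
    """Pick neurons in random order and apply the on/off rule until nothing changes."""
    state = list(state)
    changed = True
    while changed:
        changed = False
        order = list(range(len(state)))
        rng.shuffle(order)
        for i in order:
            x = total_input(w, state, i)
            if x > 0 and state[i] == 0:      # positive input: turn on
                state[i], changed = 1, True
            elif x < 0 and state[i] == 1:    # negative input: turn off
                state[i], changed = 0, True
    return state

start = [1, 0, 0, 0]
final = settle(W, start, random.Random(0))
print(final, "energy:", energy(W, final))
```

Because every flip strictly lowers the energy, the loop must stop, and it stops at a configuration where no single neuron wants to change, that is, an energy minimum. Which minimum it reaches can depend on the start state and on the random update order.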

So the configuration on the right there is actually an energy minimum. It has an energy of -4. If you take any neuron there, the ones that are on want to stay on, because they get total positive input, and the ones that are off want to stay off, because they get total negative input. But it's not the only energy minimum. A Hopfield net can have many energy minima, and where it ends up depends on where you start it and also on the sequence of random decisions about which neuron to update.

So that's a better energy minimum. Now we've turned on the triangle of units on the right, and that has a goodness of 3 + 3 - 1 = 5, and so an energy of -5. That's a better minimum. Now, Hopfield proposed that a good way to use such networks is to make the energy minima correspond to memories. Then using that binary decision rule about whether a neuron should turn on or off can clean up incomplete memories: you start with a partial memory, keep applying this decision rule, and it will clean it up. So settling to energy minima when they represent memories is a way of having a content-addressable memory. You can access an item in the memory by turning on some of the item and then applying this rule, and the network will fill in the rest.
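
The content-addressable memory idea can be sketched the same way. The storage rule here is an assumption, since the lecture does not give one: it uses a common Hebbian rule for 0/1 neurons, w_ij = sum over stored patterns p of (2p_i - 1)(2p_j - 1), and the two 8-neuron memories are hypothetical. Turning on two neurons of the first memory and applying the decision rule fills in the rest.

```python
import random

def store(patterns):
    """Hebbian storage for 0/1 patterns: w_ij = sum over patterns of (2p_i - 1)(2p_j - 1)."""
    n = len(patterns[0])
    w = [[0] * n for _ in range(n)]
    for p in patterns:
        for i in range(n):
            for j in range(n):
                if i != j:
                    w[i][j] += (2 * p[i] - 1) * (2 * p[j] - 1)
    return w

def recall(w, cue, seed=0):
    """Apply the binary decision rule in random order until the state stops changing."""
    rng = random.Random(seed)
    state = list(cue)
    changed = True
    while changed:
        changed = False
        order = list(range(len(state)))
        rng.shuffle(order)
        for i in order:
            x = sum(w[i][j] for j in range(len(state)) if state[j] == 1)  # w[i][i] is 0
            if x > 0 and state[i] == 0:
                state[i], changed = 1, True
            elif x < 0 and state[i] == 1:
                state[i], changed = 0, True
    return state

memories = [[1, 1, 1, 1, 0, 0, 0, 0],
            [1, 0, 1, 0, 1, 0, 1, 0]]
w = store(memories)
partial = [1, 1, 0, 0, 0, 0, 0, 0]   # turn on part of the first memory
print(recall(w, partial))            # → [1, 1, 1, 1, 0, 0, 0, 0]
```

Each stored pattern is an energy minimum of this network, so starting from a partial cue that belongs to only one memory lets the decision rule complete that memory.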

Terry Sejnowski and I (Terry was a student of Hopfield's) proposed a different use for these kinds of nets. Instead of using them to store memories, we could use them to construct interpretations of sensory input. The idea is that you have a net with both visible neurons and hidden neurons. The visible neurons are where you show it a sensory input, maybe a binary image. The hidden neurons are where it constructs the interpretation of that sensory input. The energy of a configuration of the network represents the badness of the interpretation, so we want low-energy interpretations.

So I'm going to give you a concrete example. Consider that ambiguous line drawing at the top. People have two ways of seeing it. There's interpretation one, which is normally what you see first, and there's another interpretation: when you see it as a convex object, that's clearly a different 3D interpretation of the same 2D line drawing. So could we make one of these networks come up with two different interpretations of the same line drawing? Well, we need to start by thinking about what a line in an image tells you about 3D edges.

So that green line is the image plane. Imagine you're looking through a window and drawing the edges of the scene out there in the world on the window. That little black line is a line in the image, and the two red lines are the lines of sight that come from your eye through the ends of that line. If you ask what edge in the world could have caused that line, there are many edges that could have caused it. There's one edge that could have caused that 2D line, but there's another one, and another one, and another one. All of these edges will cause the same line in the image.

So the problem of vision is to go backwards from the single line in the image to figure out which of these edges is really out there. You can only see one of them at a time if objects are opaque, because they all get in each other's way. So you know that that line in the image has to depict one of these edges, but you don't know which one. We could build a network where we started off by turning the lines into activations of line neurons. So let's suppose we already have that. We have a large number of neurons to represent lines in the image, and we turn on just a few of them to represent the lines in this particular image.

Now, each of those lines could depict a number of different 3D edges. So what we do is we connect that line neuron to a whole bunch of 3D edge neurons with excitatory connections. Those are the green ones. But we know we can only see one of those at a time, so we make those edge neurons inhibit each other. So now we've captured a lot about the sort of optics of perception. We do that for all of our line neurons. And now the question is, which of those edge neurons should we turn on for that? We need more information, and there's certain principles we use in interpreting images.
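
The wiring for a single line neuron can be sketched as a toy network. The numbers are illustrative assumptions, not values from the lecture: one line neuron clamped on, three hypothetical candidate 3D-edge neurons, an excitatory weight of +1 from the line to each edge neuron, and an inhibitory weight of -2 between every pair of edge neurons.

```python
import random

N_EDGES = 3      # hypothetical number of candidate 3D edges for one image line
EXCITE = 1.0     # line neuron -> edge neuron (illustrative value)
INHIBIT = -2.0   # edge neuron <-> edge neuron (illustrative value)

def edge_input(edges, k, line_on=1):
    """Total input to edge neuron k: excitation from the line, inhibition from rival edges."""
    rivals = sum(edges[m] for m in range(len(edges)) if m != k)
    return EXCITE * line_on + INHIBIT * rivals

def interpret(edges, seed):
    """Keep the line neuron clamped on and update edge neurons in random order until stable."""
    rng = random.Random(seed)
    edges = list(edges)
    changed = True
    while changed:
        changed = False
        order = list(range(len(edges)))
        rng.shuffle(order)
        for k in order:
            x = edge_input(edges, k)
            new = 1 if x > 0 else 0   # with these weights x is never exactly 0
            if new != edges[k]:
                edges[k], changed = new, True
    return edges

for seed in range(3):
    rng = random.Random(seed)
    start = [rng.randint(0, 1) for _ in range(N_EDGES)]
    print(start, "->", interpret(start, seed))
```

With these weights a state with no edge on is unstable (every edge neuron gets +1 from the line), and a state with two or more edges on is unstable (each active edge gets at most 1 - 2 = -1), so every run ends with exactly one edge neuron on. Which one wins depends on the start state and update order; that is the ambiguity the extra connections described next are meant to resolve.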

If you see two lines in an image, you assume that if they join in the image, they join in depth where they join, that is, they're at the same depth where the two lines join in the image. So we can put in extra connections for that. We could put in a connection between every pair of 3D edge neurons that join in depth at the point where they have the same end. We could put in a stronger connection if they join at right angles. We really like to see images in which things join at right angles. So we put in a whole bunch of connections like that.

And now what we hope is that, if we set the connection strengths right, we've got a network with two alternative states it can settle to, corresponding to the two alternative interpretations of the Necker cube. This gives rise to two main problems. The first problem, if we're going to use hidden neurons to come up with interpretations of images represented in the states of the visible neurons, is search: how do we avoid getting trapped in local optima? We might settle to a rather poor interpretation and not be able to jump out of it to a better one.

The second problem is learning. I sort of implied that I'd put in all those connections by hand, but we'd like a neural network to put in all those connections. The first problem, the search problem, we solve more or less by making the neurons noisy. If you have deterministic neurons, like in a standard Hopfield net, and the system has settled into one energy minimum, like A (the ball there is the configuration of the whole system), it can't get from A to B, because the decision rule for the neurons only allows things to go downhill in energy.

And the graph on the right is that decision rule: if the input is positive, turn on; if the input is negative, turn off. We would like to be able to get from A to B, but that means we have to go uphill in energy. The solution is to have noisy neurons, stochastic binary neurons. They still only have binary states, either 1 or 0, but they're probabilistic. If they get a big positive input, they almost always turn on. With a big negative input, they almost always turn off. But if the input is small, somewhere near zero, then they behave probabilistically.
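
A stochastic binary neuron of this kind can be sketched with the logistic function, which is the standard choice in Boltzmann machines; the lecture itself does not name the function, and the temperature parameter `T`, which controls how noisy the decisions are near zero input, is an assumption added for illustration.

```python
import math
import random

def p_on(total_input, T=1.0):
    """Probability of turning on: near 1 for big positive input, near 0 for big negative."""
    return 1.0 / (1.0 + math.exp(-total_input / T))

def stochastic_update(total_input, rng, T=1.0):
    """The state is still binary (0 or 1); only the decision is random."""
    return 1 if rng.random() < p_on(total_input, T) else 0

rng = random.Random(0)
for x in (-6.0, -0.5, 0.0, 0.5, 6.0):
    ons = sum(stochastic_update(x, rng) for _ in range(10_000))
    print(f"input {x:+.1f}: p_on = {p_on(x):.3f}, observed = {ons / 10_000:.3f}")
```

As `T` shrinks toward 0, this approaches the deterministic rule of a standard Hopfield net for any nonzero input; a larger `T` makes the neuron noisier.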

If it's small and positive, they usually turn on, but occasionally turn off. If it's small and negative, they usually turn off, but occasionally turn on. They don't have real values; they're always binary, but they make these probabilistic decisions. Now, if you want to interpret a binary image using these hidden neurons, what we do is clamp the binary image on the visible units, which specifies what the input is, and start all the hidden neurons off in random states. Then we pick a hidden neuron at random and look at the total input it's getting from the active visible and hidden neurons.

If it gets a total positive input, we probably turn it on, but we might turn it off if it's only a small positive input. So we keep applying this rule: turn neurons on if they have a big positive input, turn them off if they have a big negative input, and if the input is small, make a probabilistic decision. If we keep picking hidden neurons and doing that, the system will eventually approach what's called thermal equilibrium. That's a difficult concept for non-physicists, and I'll explain it later. Once the system has reached thermal equilibrium, the states of the hidden neurons are an interpretation of that input.

So in the case of that line drawing, you would hopefully have one hidden neuron turned on for each line neuron, and you'd get an interpretation that is one of the two interpretations of the Necker cube. What we hope is that the low-energy interpretations will be good interpretations of the data. So for this line drawing, if we could learn the right weights between the 2D line neurons and the 3D edge neurons, and the right weights between the 3D edge neurons, then hopefully the low-energy states of the network would correspond to good interpretations, namely seeing 3D rectangular objects.

Now, thermal equilibrium is not what you might first expect, which is that the system has settled to a stable state. What has stabilized is not the state of the system. It's a far more abstract thing that's hard to think about: the probability distribution over configurations of the system, which is very hard for a normal person to think about. It settles to a particular distribution called the Boltzmann distribution. In the Boltzmann distribution, once the system has settled to thermal equilibrium, the probability of finding it in a particular configuration is determined solely by the energy of that configuration.
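
In symbols, using the energy defined earlier as minus the goodness, the Boltzmann distribution can be written as follows. The temperature T is the standard physics parameter and is not mentioned in the lecture; Boltzmann machines usually take T = 1.

$$
E(\mathbf{s}) = -\sum_{i<j} w_{ij}\, s_i s_j,
\qquad
P(\mathbf{s}) = \frac{e^{-E(\mathbf{s})/T}}{\sum_{\mathbf{s}'} e^{-E(\mathbf{s}')/T}},
\qquad
\frac{P(\mathbf{a})}{P(\mathbf{b})} = e^{-\left(E(\mathbf{a}) - E(\mathbf{b})\right)/T}
$$

Because the normalizing sum is the same for every configuration, the ratio of the probabilities of two configurations depends only on their energy difference, which is why lower-energy configurations are more probable.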

And you're more likely to find it in lower-energy configurations. So at thermal equilibrium, the good states, the low-energy states, are more probable than the bad states. Now, to think about thermal equilibrium, there's a trick physicists use that hopefully allows ordinary people to understand this concept. You just imagine a very large ensemble, gazillions of them, of identical networks. You have these gazillion Hopfield networks. They all have exactly the same weights, so they're essentially the same system. But you start them all off in different random states, and they all make their own independent random decisions. At any moment, there'll be a certain fraction of the systems in each configuration.

To begin with, that fraction will just depend on how you started them off. Maybe you start them off randomly, so all configurations are equally likely, and in this huge ensemble you'll get equal numbers of systems in every possible configuration. Then you start running this algorithm of updating neurons in a way that tends to lower the energy but occasionally allows it to go up. Gradually, the fraction of the systems in any one configuration will stabilize. Any one system will keep jumping between configurations, but the fraction of all the systems in a particular configuration will be stable.
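
The ensemble picture can be checked numerically on a network small enough to enumerate. This is a hedged sketch with a hypothetical 3-neuron network and T = 1 assumed: it starts 5,000 identical copies in random states, gives each copy 150 random single-neuron stochastic updates, and compares the fraction of copies in each configuration with the exact Boltzmann probabilities.

```python
import itertools
import math
import random

# Hypothetical symmetric weights for a 3-neuron stochastic Hopfield net (T = 1 assumed).
W = [[0.0, 1.5, -1.0],
     [1.5, 0.0, 0.5],
     [-1.0, 0.5, 0.0]]
N = len(W)

def energy(s):
    return -sum(W[i][j] * s[i] * s[j] for i in range(N) for j in range(i + 1, N))

def boltzmann():
    """Exact Boltzmann distribution: P(s) is proportional to exp(-E(s))."""
    configs = list(itertools.product((0, 1), repeat=N))
    z = sum(math.exp(-energy(s)) for s in configs)
    return {s: math.exp(-energy(s)) / z for s in configs}

def run_ensemble(copies=5000, steps=150, seed=0):
    """Start many identical nets in random states; each makes its own random decisions."""
    rng = random.Random(seed)
    systems = [[rng.randint(0, 1) for _ in range(N)] for _ in range(copies)]
    for _ in range(steps):
        for s in systems:
            i = rng.randrange(N)                                  # pick a neuron at random
            x = sum(W[i][j] * s[j] for j in range(N) if j != i)   # its total input
            s[i] = 1 if rng.random() < 1.0 / (1.0 + math.exp(-x)) else 0
    counts = {}
    for s in systems:
        counts[tuple(s)] = counts.get(tuple(s), 0) + 1
    return {config: n / copies for config, n in counts.items()}

exact, observed = boltzmann(), run_ensemble()
for s in sorted(exact, key=exact.get, reverse=True):
    print(s, f"E={energy(s):+.1f}  exact={exact[s]:.3f}  ensemble={observed.get(s, 0.0):.3f}")
```

Individual copies keep changing state, but the fractions settle near the exact probabilities, with the lowest-energy configuration, (1, 1, 0), the most common.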

So one system may leave a configuration, but other systems will go into that configuration. This is called detailed balance. And the fraction of systems stays stable. That's it for the physics. So let's imagine generating an image now, not interpreting an image but generating one. To generate an image, you start by picking random states for all of the neurons, both the hidden neurons and the visible neurons. Then you pick a hidden or visible neuron and update its state using the usual stochastic rule. If it's got lots of positive input, probably turn it on. Lots of negative input, probably turn it off.

If the input is small, it behaves a bit stochastically, and you keep doing that. If you keep doing that repeatedly until the system approaches thermal equilibrium, then you look at the states of the visible units, and that's now an image generated by this network from the distribution it believes in: the Boltzmann distribution, in which low-energy configurations are much more likely than high-energy configurations. But it believes in many possible alternative images, and you can pick one of them, one of the things it believes in, by running this process. Okay, so now what's the aim of learning in a Boltzmann machine?

The aim of learning in a Boltzmann machine is to make it so that when the network is generating images (think of it as dreaming: it's just randomly imagining things), those images look like the images it perceives when it's doing perception on real images. If we can achieve that, then the states of the hidden neurons will actually be a good way to interpret the real images. They'll capture the underlying causes of the image. At least, that's the hope. Another way of saying this is that learning the weights in the network is equivalent to figuring out how to use those hidden neurons so that the network will generate images that look like the real images.

That seems like an extremely hard problem. Everybody thought it was going to be very complicated. It turns out Terry and I had an outrageously optimistic approach. The question is: could you start with a neural net, a stochastic Hopfield net of this kind, that has lots of hidden neurons with random weights between them and random weights connecting them to the visible neurons? So it's a big random neural net. Then you just show it lots of images. We were hoping for something that seems ridiculous: that on perceiving lots of real images, it will create all the connections between the hidden units, and between the hidden units and the visible units.

It'll weight those connections correctly so that it comes up with sensible interpretations of images in terms of causes, like 3D edges that join at right angles. That sounds very optimistic, and you might have thought that the learning algorithm to do that would be very complicated. The amazing thing about Boltzmann machines is that there's a very simple learning algorithm that will do that. This was discovered by Terry Sejnowski and me in 1983. The learning algorithm goes like this. It has two phases.

There's a wake phase. That's the phase when the network is being presented with images. You clamp an image on the visible units, you let the hidden units rattle around and settle down to thermal equilibrium. And then once the hidden units are at thermal equilibrium with the visible neurons, for every pair of connected neurons, either two hiddens or a visible and a hidden, if they're both on, you add a small amount to the weight between them. That's a pretty simple learning rule. That's a learning rule that people who believe in Donald Hebb would like.

Then there's a sleep phase. Obviously, if you just run the wake phase, the weights will only get bigger, and pretty soon they'll all be positive and all the neurons will turn on all the time. That's not much good. You need to combine it with a sleep phase. In the sleep phase, you can think of the network as dreaming. You settle to thermal equilibrium by updating the states of all the neurons, the hidden ones and the visible ones. Once you've reached thermal equilibrium, for every pair of connected neurons, if they're both on, you subtract a small amount from the weight between them.
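
The two-phase rule can be tried on a network small enough that "how often two neurons are on together" can be computed exactly instead of estimated by sampling. This is a hedged sketch, not the original implementation. It assumes 3 visible and 2 hidden neurons, connections only between visible-hidden and hidden-hidden pairs as described above, and a bias on every neuron (a standard addition the lecture does not mention). It also replaces the sampling in both phases with exact enumeration of all 32 configurations, which only works for tiny nets. The data vectors are hypothetical.

```python
import itertools
import math
import random

V, H = 3, 2                      # tiny sizes so every configuration can be enumerated
N = V + H                        # neurons 0..V-1 are visible, V..N-1 are hidden
# Connected pairs: visible-hidden and hidden-hidden (no visible-visible), as in the lecture.
PAIRS = [(i, j) for i in range(N) for j in range(i + 1, N) if j >= V]
CONFIGS = list(itertools.product((0, 1), repeat=N))

def energy(w, b, s):
    """Minus the goodness, plus a bias term for each neuron (biases are an assumption)."""
    return -(sum(w[(i, j)] * s[i] * s[j] for i, j in PAIRS) + sum(b[k] * s[k] for k in range(N)))

def equilibrium_stats(w, b, clamp=None):
    """Exact equilibrium averages of s_i*s_j (connected pairs) and s_k.
    With clamp set, only configurations whose visible part equals clamp are allowed."""
    configs = [c for c in CONFIGS if clamp is None or c[:V] == clamp]
    p = [math.exp(-energy(w, b, c)) for c in configs]
    z = sum(p)
    pair = {(i, j): sum(q * c[i] * c[j] for q, c in zip(p, configs)) / z for i, j in PAIRS}
    single = [sum(q * c[k] for q, c in zip(p, configs)) / z for k in range(N)]
    return pair, single

def log_likelihood(w, b, data):
    """Sum over the data of log P(v), marginalizing over the hidden neurons."""
    log_z = math.log(sum(math.exp(-energy(w, b, c)) for c in CONFIGS))
    return sum(math.log(sum(math.exp(-energy(w, b, c)) for c in CONFIGS if c[:V] == v)) - log_z
               for v in data)

def train(data, epochs=200, lr=0.1, seed=0):
    rng = random.Random(seed)
    w = {pair: rng.uniform(-0.1, 0.1) for pair in PAIRS}   # small random weights
    b = [0.0] * N
    for _ in range(epochs):
        # Wake phase: clamp each data vector and let the hidden neurons reach equilibrium.
        wake_pair = {pair: 0.0 for pair in PAIRS}
        wake_single = [0.0] * N
        for v in data:
            pair_stats, single_stats = equilibrium_stats(w, b, clamp=v)
            for pair in PAIRS:
                wake_pair[pair] += pair_stats[pair] / len(data)
            for k in range(N):
                wake_single[k] += single_stats[k] / len(data)
        # Sleep phase: the whole network runs freely ("dreams") to equilibrium.
        sleep_pair, sleep_single = equilibrium_stats(w, b)
        # Add for wake correlations, subtract for sleep correlations.
        for pair in PAIRS:
            w[pair] += lr * (wake_pair[pair] - sleep_pair[pair])
        for k in range(N):
            b[k] += lr * (wake_single[k] - sleep_single[k])
    return w, b

data = [(1, 1, 0), (1, 1, 0), (0, 0, 1), (0, 1, 1)]   # hypothetical 3-pixel binary "images"
before = log_likelihood(*train(data, epochs=0), data)
after = log_likelihood(*train(data, epochs=200), data)
print(f"log likelihood: {before:.3f} -> {after:.3f}")
```

With a small enough learning rate, each exact wake-minus-sleep step is a gradient-ascent step on the log likelihood, so the log likelihood of the data goes up; with sampled statistics, as in the lecture, that holds only on average.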

That's a pretty simple learning algorithm, and it's pretty amazing that it does the right thing. On average, that learning algorithm changes the weights so as to increase the probability that the images the network generates when it's dreaming will look like the images it sees when it's perceiving. Now, this next part is not for the general audience, so you mustn't read these next two lines. For statisticians and machine learning people: in expectation, the algorithm follows the gradient of the log likelihood. "In expectation" means that it does so very noisily and often does the wrong thing, but on average it follows the gradient.

In other words, it makes it more likely that the network, when it's dreaming, will generate the kinds of images it sees when it's awake. To put it another way, the weights change so that the images the network finds plausible, the low-energy images, resemble the images it sees when it's awake. What's happening in the learning algorithm, of course, is that in the wake phase you're lowering the energy of the whole configurations the network arrives at when it sees real data, and in the sleep phase you're raising the energy of the configurations it arrives at when it's dreaming.

So what you're trying to make it do is believe in what you see when you're awake and unbelieve in what you dream when you're asleep. Okay, so if you ask what the process of settling to thermal equilibrium achieves, it achieves something amazing. To know how to change one weight, you need to know something about all the other weights, because they all interact. But everything one weight needs to know about all the other weights shows up in the difference between two correlations: how often the two neurons are on together when the network is observing data, and how often they're on together when the network isn't observing data, when it's dreaming.
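
As a formula, the rule can be written as below, where ε is a small learning rate (notation introduced here), the first average is taken at equilibrium with data clamped on the visible units, and the second while the network runs freely. For a Boltzmann machine at temperature T = 1, averaging the bracket over the training images D gives exactly the derivative of the average log likelihood with respect to w_ij.

$$
\Delta w_{ij} = \varepsilon \left( \langle s_i s_j \rangle_{\text{data}} - \langle s_i s_j \rangle_{\text{dreaming}} \right),
\qquad
\frac{\partial}{\partial w_{ij}} \frac{1}{|D|} \sum_{\mathbf{v} \in D} \log P(\mathbf{v}) = \langle s_i s_j \rangle_{\text{data}} - \langle s_i s_j \rangle_{\text{dreaming}}
$$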

And somehow those correlations measured in those two situations tell a weight everything it needs to know about all the other weights. The reason that's surprising is that in an algorithm like backpropagation, which is what all the neural nets now actually use, you require a backward pass to convey information about the other weights. That backward pass behaves very differently from the forward pass. In the forward pass, you're communicating the activities of neurons to later layers of neurons. In the backward pass, you're conveying sensitivities.

You're conveying a different kind of quantity altogether. And that makes backpropagation rather implausible as a theory of how the brain works. And so when Terry came up with this theory, this learning procedure for Boltzmann machines, we were completely convinced that must be how the brain works. And we decided we were going to get the Nobel Prize in physiology or medicine. It never occurred to us that if it wasn't how the brain works, we could get the Nobel Prize in physics. Okay, there's only one problem.

And the problem is that settling to thermal equilibrium is a very slow process for very big networks with large weights. If the weights are very small, you can do it quickly, but when the weights are big, after the network has learned some stuff, it's very slow. So Boltzmann machines are actually a wonderful, romantic idea. They have a beautifully simple learning algorithm that does something very complicated: it constructs whole networks of hidden units that interpret the data.

The only thing is that they're just much too slow. So that was that for Boltzmann machines, and the lecture should really have ended there. But 17 years later, I realized that if you restrict Boltzmann machines a lot and just have hidden units that aren't connected to each other, you can get a much faster learning algorithm. If there are no connections between the hidden neurons, the wake phase becomes very simple. You clamp an input on the visible units to represent an image.

Then you can update all the hidden neurons in parallel, just once, and you've reached thermal equilibrium. Each hidden neuron just looks at the visible input and randomly picks one of its two states based on how much input it's getting. So you're at thermal equilibrium in one step. That's great for the hidden neurons. You still have a problem in the sleep phase: you have to put the network in some random state, update the hidden neurons, update the visible neurons, update the hidden neurons, update the visible neurons, and you have to go on for a long time to reach thermal equilibrium. So the algorithm is still hopeless.

But it turns out there's a shortcut. The shortcut doesn't quite do the right thing, which is embarrassing, but it works pretty well in practice. So the shortcut works like this. You put data on the visible units, that's an image, and then you update all the hidden neurons in parallel, and they've now reached thermal equilibrium with the data. You now update all the visible units, and you get what we call a reconstruction. It's going to be like the data, but not quite the same. Now you update all the hidden units again. And then you stop.

That's it, you're done. The way you do learning is that you measure how often neurons i and j, visible neuron i and hidden neuron j, are on together when you're showing it data and it has reached equilibrium with the data, and you measure how often they're on together when you're showing it reconstructions and it has reached equilibrium with the reconstruction. That difference is your learning algorithm: you just change the weights in proportion to that difference. That actually works pretty well, and it's much, much faster. It's fast enough to make Boltzmann machines finally practical.
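
The shortcut amounts to one step of what is usually called contrastive divergence (CD-1). Below is a minimal sketch for a small RBM. Beyond the lecture, it assumes biases on both layers, logistic probabilities, sampled binary states for the first hidden update and for the reconstruction, hidden-unit probabilities rather than samples in the two correlation measurements (a common practical variant), and a hypothetical toy dataset of two 6-pixel patterns.

```python
import math
import random

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

class RBM:
    """Restricted Boltzmann machine: no hidden-hidden or visible-visible connections."""

    def __init__(self, n_visible, n_hidden, seed=0):
        self.rng = random.Random(seed)
        self.w = [[self.rng.gauss(0.0, 0.1) for _ in range(n_hidden)] for _ in range(n_visible)]
        self.bv = [0.0] * n_visible
        self.bh = [0.0] * n_hidden

    def hidden_probs(self, v):
        # Hidden units are independent given v, so they can all be updated in parallel.
        return [sigmoid(self.bh[j] + sum(v[i] * self.w[i][j] for i in range(len(v))))
                for j in range(len(self.bh))]

    def visible_probs(self, h):
        return [sigmoid(self.bv[i] + sum(h[j] * self.w[i][j] for j in range(len(h))))
                for i in range(len(self.bv))]

    def sample(self, probs):
        return [1 if self.rng.random() < p else 0 for p in probs]

    def cd1_update(self, v0, lr=0.1):
        ph0 = self.hidden_probs(v0)                  # up: hidden units given the data
        v1 = self.sample(self.visible_probs(self.sample(ph0)))   # down: a reconstruction
        ph1 = self.hidden_probs(v1)                  # up again, then stop
        for i in range(len(v0)):
            for j in range(len(ph0)):
                # Data correlation minus reconstruction correlation.
                self.w[i][j] += lr * (v0[i] * ph0[j] - v1[i] * ph1[j])
            self.bv[i] += lr * (v0[i] - v1[i])
        for j in range(len(ph0)):
            self.bh[j] += lr * (ph0[j] - ph1[j])

    def reconstruction_error(self, data):
        """Mean squared difference between data and the reconstruction probabilities."""
        total = 0.0
        for v in data:
            pv = self.visible_probs(self.hidden_probs(v))
            total += sum((a - b) ** 2 for a, b in zip(v, pv))
        return total / (len(data) * len(data[0]))

data = [[1, 1, 1, 0, 0, 0], [0, 0, 0, 1, 1, 1]]   # hypothetical 6-pixel "images"
rbm = RBM(n_visible=6, n_hidden=3)
before = rbm.reconstruction_error(data)
for epoch in range(500):
    for v in data:
        rbm.cd1_update(v)
print(f"reconstruction error: {before:.3f} -> {rbm.reconstruction_error(data):.3f}")
```

Only one up-down-up pass is made per example, so no step ever waits for the free-running network to reach thermal equilibrium; that is where the speedup comes from, at the cost of not following the exact likelihood gradient.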

Netflix actually used restricted Boltzmann machines combined with other methods to decide which new movies to suggest you watch, based on the preferences of all sorts of other users who are a bit like you. And they actually worked: this combination of Boltzmann machines and other methods won the Netflix competition for how well you can predict what users will like. But of course, with just hidden neurons that aren't connected to each other, you can't build layers of feature detectors, which are what you need for recognizing objects in images or recognizing words in speech.

It looks like having just one layer of hidden units without connections between them is a strong restriction, but actually you can get around that. What you can do is stack these restricted Boltzmann machines. You take your data and show it to a restricted Boltzmann machine (RBM), which has just one hidden layer. Using this contrastive divergence algorithm that just goes up and down and up again, you learn some weights so that the hidden units capture structure in the data. The hidden units turn into feature detectors that capture commonly correlated things in the data.

Then you take those hidden activity patterns, the binary activity patterns in the hidden units, and you treat those as data. You just copy them into another RBM, and they're the data for that RBM. The second RBM looks at these features that have captured correlations in the data, and it captures correlations between those features. You keep going like that, so you're capturing more and more complicated correlations. So you can learn the second set of weights, W2, and you can do it as many times as you like. Let's learn a third set of weights.

So now we've got a bunch of separate Boltzmann machines, each of which is finding structure among the hidden units of the previous Boltzmann machine. Then what you can do is stack up these Boltzmann machines and just treat them as a feed-forward net. So ignore the fact that the connections are symmetrical, and just use the connections in one direction. In your first hidden layer, you've extracted features that capture correlations in the raw data. In your second hidden layer, you've extracted features that capture correlations in the features extracted in the first hidden layer, and so on. So you're getting more and more abstract features: correlations among correlations.
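
Greedy stacking can be sketched with a compact CD-1 trainer. Everything here is illustrative: the layer sizes, epoch count, learning rate, biases, and the four 6-pixel training patterns are hypothetical. Each RBM's sampled binary hidden patterns become the data for the next RBM, which yields W1, W2, and W3, and the stack is then run as a feed-forward net using only the upward direction of each layer's weights.

```python
import math
import random

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def up(w, bh, v):
    """Hidden-unit probabilities given a visible vector (one layer, upward)."""
    return [sigmoid(bh[j] + sum(v[i] * w[i][j] for i in range(len(v)))) for j in range(len(bh))]

def down(w, bv, h):
    """Visible-unit probabilities given a hidden vector (one layer, downward)."""
    return [sigmoid(bv[i] + sum(h[j] * w[i][j] for j in range(len(h)))) for i in range(len(bv))]

def sample(probs, rng):
    return [1 if rng.random() < p else 0 for p in probs]

def train_rbm(data, n_hidden, rng, epochs=200, lr=0.1):
    """Train one RBM with CD-1: up, down, up again."""
    n_visible = len(data[0])
    w = [[rng.gauss(0.0, 0.1) for _ in range(n_hidden)] for _ in range(n_visible)]
    bv, bh = [0.0] * n_visible, [0.0] * n_hidden
    for _ in range(epochs):
        for v0 in data:
            ph0 = up(w, bh, v0)
            v1 = sample(down(w, bv, sample(ph0, rng)), rng)
            ph1 = up(w, bh, v1)
            for i in range(n_visible):
                for j in range(n_hidden):
                    w[i][j] += lr * (v0[i] * ph0[j] - v1[i] * ph1[j])
                bv[i] += lr * (v0[i] - v1[i])
            for j in range(n_hidden):
                bh[j] += lr * (ph0[j] - ph1[j])
    return w, bh

def stack_rbms(data, layer_sizes, seed=0):
    """Greedy stacking: each RBM's binary hidden activity becomes the next RBM's data."""
    rng = random.Random(seed)
    layers = []
    for n_hidden in layer_sizes:
        w, bh = train_rbm(data, n_hidden, rng)
        layers.append((w, bh))
        data = [sample(up(w, bh, v), rng) for v in data]   # copy hidden patterns upward
    return layers

def feed_forward(layers, v):
    """Ignore the symmetry and use each layer's weights in the upward direction only."""
    for w, bh in layers:
        v = up(w, bh, v)
    return v

data = [[1, 1, 1, 0, 0, 0], [0, 0, 0, 1, 1, 1],
        [1, 1, 0, 0, 0, 0], [0, 0, 0, 0, 1, 1]]   # hypothetical training "images"
layers = stack_rbms(data, layer_sizes=[4, 3, 2])  # learns W1, W2, W3
print([round(p, 2) for p in feed_forward(layers, data[0])])
```

The output of `feed_forward` is the kind of top-layer feature vector that a final supervised layer would then be trained on; that fine-tuning step with backpropagation is not shown.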

Once you've stacked them up like that, you can just add a final layer like this and do supervised learning; that is, you can now start telling it the names of things, like cat and dog. Those are the class labels, and you're going to have to learn the weights to those class labels. But you start with this network that you've initialized by learning a stack of Boltzmann machines, and two beautiful things happen. The first beautiful thing is that if you initialize this way, the network learns much faster than if you initialize with random weights, because it has already learned a whole bunch of sensible features for modeling structure in the data.

It hasn't learned anything about what things are called, but it has learned about the structure in the data, and then learning what things are called is relatively quick. It's just like small children: they don't have to be told "that's a cow" 2,000 times before they know it's a cow. They figure out the concept of a cow for themselves, and then their mother says, "that's a cow," and they've got it, well, maybe twice. So it makes it much faster to learn, for example, to recognize objects in images. It also makes the networks generalize much better, because they've done most of the learning without using labels.

They don't need many labels now. They're not extracting all the information from the labels; they're extracting it from the correlations in the data. That makes them generalize much better while needing far fewer labels. So that was all very nice. Between about 2006 and 2011, people, particularly in my lab, Yoshua Bengio's lab, and Yann LeCun's lab, were using stacks of RBMs to pre-train feed-forward neural networks, and then they would apply backpropagation.

In 2009, two students in my lab, George Dahl and Abdel-rahman Mohamed, showed that this technique worked a little bit better than the best existing techniques for recognizing fragments of phonemes in speech. That then changed the speech recognition community. My graduate students went off to various leading speech groups, and in 2012, systems based on exactly these stacked restricted Boltzmann machines went into production at Google, and they gave better speech recognition. Suddenly, speech recognition on Android got a lot better.

Unfortunately for Boltzmann machines, once we'd shown that these deep neural networks really worked very well if you pre-trained them with stacks of restricted Boltzmann machines, people found other ways of initializing the weights, and they no longer use stacks of Boltzmann machines. But if you're a chemist, you know that enzymes are useful things. Even though RBMs are no longer used, they allowed us to make the transition from thinking that deep neural networks would never work to seeing that deep neural networks actually could be made to work rather easily if you initialized them this way. Once you've made the transition, you don't need the enzyme anymore.

So think of them as historical enzymes. The idea of using unlearning during sleep, though, to get an algorithm that's more biologically plausible and avoids the backward pass of backpropagation: I still think there's a lot of mileage in that idea. And I'm still optimistic that when we do eventually understand how the brain learns, it will turn out to involve using sleep to do unlearning. So I'm still optimistic, and I think I'm done. Very nice. Thank you very much. So please join me now in welcoming both laureates on the stage to jointly receive our warmest applause.

ARTIFICIAL INTELLIGENCE, SCIENCE, TECHNOLOGY, NEURAL NETWORKS, PHYSICS, GEOFFREY HINTON, NOBEL PRIZE