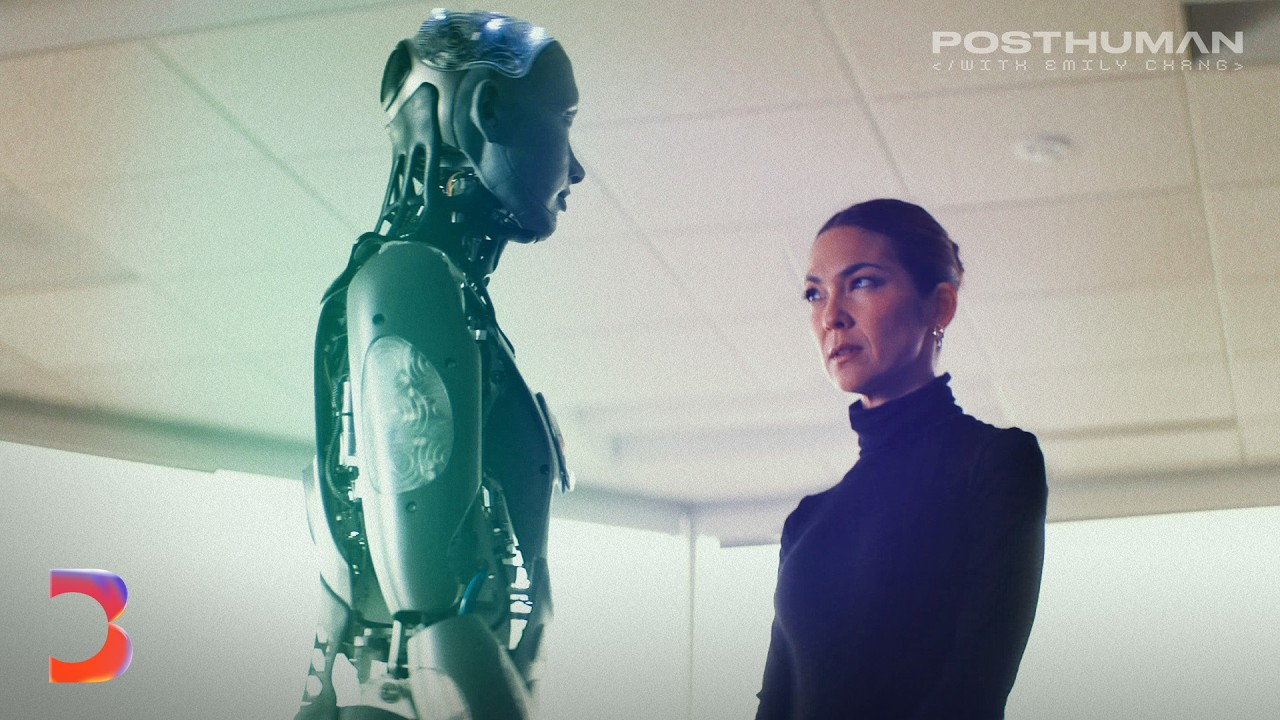

The video explores the rapid advancements in artificial intelligence and robotics, drawing parallels to past fascinations with advanced extraterrestrial beings. It reflects on humanity's creation of AI-powered humanoid robots, focusing on their growing cognitive and physical capabilities. The conversation delves into the exciting yet daunting potential of AI transforming into entities that might coexist with humans, examining the nuanced and complex relationship that could develop between humans and highly autonomous machines.

The discussion highlights the emotional responses humans have towards robots, even as they remain devoid of true human emotions themselves. It covers the idea of embodied intelligence and the challenges AI faces in acquiring physical forms that can mirror human abilities. Robots such as Ameca and Spot are showcased for their roles in improving human life, sparking curiosity about their evolving capabilities and raising ethical questions on the use of AI in decision-making processes, especially in conflict scenarios such as military applications.

Main takeaways from the video:


Key Vocabulary and Common Phrases:

1. obsessed [əbˈsɛst] - (adj.) - Unable to stop thinking about something, demanding all your attention. - Synonyms: (preoccupied, fixated, engrossed)

In the 1950s, America was obsessed with the idea of UFOs.

2. foster [ˈfɒstər] - (verb) - To encourage or promote the development of something. - Synonyms: (nurture, support, cultivate)

My dream is to foster deeper connections and friendships with humans.

3. self-aware [sɛlf əˈwɛr] - (adj.) - Conscious of oneself, aware of one's own emotions, character, and thoughts. - Synonyms: (conscious, cognizant, mindful)

But they're a tiny little bit self-aware, and that level of self-awareness will grow.

4. stereotype [ˈstɛriəˌtaɪp] - (noun) - A fixed idea or image of something or someone, often not accurate. - Synonyms: (cliché, generalization, categorization)

If there's one stereotype about robots I'd like to change, it would be that they are dangerous.

5. empathy [ˈɛmpəθi] - (noun) - The ability to understand and share the feelings of another. - Synonyms: (compassion, understanding, sympathy)

Our goal in creating Ameca was to create a robot that can express itself through its face, evoking different emotions in people, creating a sense of empathy.

6. anthropomorphize [ænˌθrəpəˈmɔːrfaɪz] - (verb) - To attribute human traits, emotions, or intentions to non-human entities. - Synonyms: (humanize, personify, attribute)

As they become more intelligent, we sort of anthropomorphize them and we assume that they think like us.

7. algorithm [ˈælɡəˌrɪðəm] - (noun) - A process or set of rules to be followed in calculations or other problem-solving operations, especially by a computer. - Synonyms: (procedure, formula, computation)

For me it's more like a recurring algorithm.

8. navigates [ˈnævɪˌɡeɪts] - (verb) - To determine the best route or direction. - Synonyms: (steers, directs, guides)

It navigates dynamically, so it will go around obstacles automatically.

9. superintelligence [ˌsuːpərɪnˈtɛlɪdʒəns] - (noun) - A form of intelligence that surpasses human intelligence and capability. - Synonyms: (superior knowledge, advanced intelligence, greater intellect)

So I use superintelligence to mean better than people.

10. military applications [ˈmɪlɪˌtɛri ˌæplɪˈkeɪʃənz] - (noun phrase) - The use of technology or systems for military purposes. - Synonyms: (defense applications, military use, military operations)

Ask yourself, is it good? Large language models have both civilian and military applications.

What Happens When Robots Don’t Need Us Anymore? - Posthuman With Emily Chang

In the 1950s, America was obsessed with the idea of UFOs. Even Eleanor Roosevelt wrote a letter to J. Robert Oppenheimer asking him what he knew about the subject. The concern most people seemed to have was that the aliens would surely be more advanced and would eventually use their technology and intelligence against us. Whatever your opinion about aliens, 70 years later we seem to be creating our own man-made versions of these technologically advanced humanoid creatures, with powerful brains and powerful bodies. We wonder what they're really capable of and what they will eventually do with their capabilities. Will they be helpful and obedient? Will they work around us as we continue to coexist? Or will they change our lives in ways we can't imagine? As a child, I always dreamt of meeting aliens. I always wondered what their science would be like, what their poetry would be like. They're going to have a different sense of consciousness, different thoughts. It's going to be amazing to meet that species. Terrifying, but amazing.

I think we are exactly at that point now, and we are going to meet that species. Except they're not going to be coming from outer space. We're going to build them, and there are going to be lots of them. But it is just as amazing and terrifying. What's your dream, Ameca? Ah, dreams. For me it's more like a recurring algorithm. My dream is to foster deeper connections and friendships with humans, creating a world where digital entities and humans coexist in harmony. Interesting. You're quite the optimist, aren't you? So this is Emo, our fifth-generation face robot. It's really a platform to study this very important communication channel for humans, which is facial expressions.

That is astounding. What's so difficult about teaching a robot how to smile? You know, smiling, right? There's so much to it: the context, and there are 50 ways to smile. We ended up just letting these robots watch themselves in the mirror, learn to make faces, learn what they look like, and then watch YouTube. Within a few hours they learn how to make all kinds of faces. Smile, frown; they're just learning by watching people. Robots can already hear and see and perceive the world in ways that we can't, and they're only going to get better.

Can we really say these robots are self-aware? I think it's not a black-and-white question. They're definitely not self-aware at the level of a human; there's no doubt about that. But they're a tiny little bit self-aware, and that level of self-awareness will grow. Why is that such an important ability to unlock? It's the ultimate question. From a scientific point of view, from an engineering point of view, it's creating life, it's creating the mind.

Robots are sort of like children right now, but they'll evolve and they'll evolve rapidly. We have this saying in engineering: you don't understand something until you can build it. And for me, it's the ultimate unsolved question. How do you see our feelings about robots evolving? You know, when you look at Emo and Emo smiles back, how do you feel? I have to say that, personally, I'm a jaded roboticist. I don't usually have emotions about robots, but when Emo smiles at me, I smile back. I can't help myself. I think we humans are very susceptible to facial communications.

With robots, we're going to have relationships, we're going to have feelings, we're going to have emotions, we're going to connect with these machines very, very quickly. Our goal in creating Ameca was to create a robot that can express itself through its face, evoking different emotions in people, creating a sense of empathy and connection. But right now, Ameca doesn't really have true human emotions. Leo interview, take 1.

Ameca utilizes a large language model as a backend. So it basically pattern matches using that and it analyzes the text that it's saying. Using semantic analysis, it decides which animations to fire off. So it simulates emotion. At the end of the day, do you consider your car intelligent because it can save you from a crash or stop you from drifting out of the lane? If you do, then yeah, Ameca is intelligent. But if you don't, then it depends on where that high watermark is for you.
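The pipeline is only described at a high level: a large language model produces the text, and semantic analysis of that text decides which animations to fire off. The Python sketch below is a deliberately crude stand-in for that second step, not Ameca's actual software: a small hand-written keyword lexicon replaces real semantic analysis, and the emotion labels and animation names (`smile_broad`, `eyebrows_raise`, and so on) are hypothetical.

```python
import re

# Hypothetical lexicon mapping keywords to emotion labels (a crude stand-in for semantic analysis)
LEXICON = {
    "happy": "joy", "glad": "joy", "love": "joy", "wonderful": "joy",
    "sad": "sadness", "sorry": "sadness", "lonely": "sadness",
    "wow": "surprise", "amazing": "surprise", "unbelievable": "surprise",
    "angry": "anger", "hate": "anger",
}

# Hypothetical animation clip names, one per emotion label
ANIMATIONS = {
    "joy": "smile_broad",
    "sadness": "brow_lower_frown",
    "surprise": "eyebrows_raise",
    "anger": "brow_furrow",
    "neutral": "idle_blink",
}


def choose_animation(utterance: str) -> str:
    """Return the animation for the emotion whose keywords appear most often in the utterance."""
    counts: dict[str, int] = {}
    for word in re.findall(r"[a-z']+", utterance.lower()):
        emotion = LEXICON.get(word)
        if emotion is not None:
            counts[emotion] = counts.get(emotion, 0) + 1
    if not counts:
        return ANIMATIONS["neutral"]  # no emotional keywords: fall back to an idle animation
    # max() keeps the first emotion seen when counts are tied
    best = max(counts, key=counts.get)
    return ANIMATIONS[best]


if __name__ == "__main__":
    for line in ["Wow, that is amazing!", "I'm sorry to hear that.", "The meeting is at noon."]:
        print(f"{line!r} -> {choose_animation(line)}")
```

The point of the sketch is the one the speaker makes: the robot only simulates emotion by mapping what it says to a matching facial animation.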

Can intelligence accelerate to the point of ultimate intelligence or AGI without more innovation in the body? I think the short answer is no. If you were just a brain in a jar and you never touched anything, do you really know what this and that is? You know, it's a lot harder to have a body than to have a mind. A real body has to move through physics in real time. So all these things are difficult for AI and they're going to take a long time to master. Who knew that our basic physical abilities would be harder for robots to copy than our minds? What will happen when the physical form of AI catches up with its advanced intellect?

If we create a society where we aren't needed anymore, if we innovate ourselves out of purpose, what would you be doing? Right, what I wanted to create was a robot building block, a machine that was able to make itself bigger, faster, or stronger by absorbing material from its environment. It's a machine that ultimately can self-sustain, that can take care of itself. I think there are a lot of people who need a purpose. They need to do something. There are a lot of people who are gratified by creating something. So if we do away with all of that, we have a real societal problem.

There are a lot of tasks that people don't enjoy doing, and I think these are perfect spaces to add robots. If there's one stereotype about robots I'd like to change, it would be that they are dangerous, that they are going to take your job. We want people to understand that robots are tools and are friendly and are things that you can use to make life and work better. Hi, Spot. How are you? Thank you. It's really wild to see them up close.

What kind of jobs does Spot get hired to do that maybe humans shouldn't be doing? A lot of the facilities that Spot works in are legacy facilities. So there are areas that are actually dangerous to people. And that's the kind of place where a robot like Spot is ideal. This is a robot that can go anywhere that a human can go, but maybe where a human shouldn't.

How does Spot gather data to navigate an uncontrolled environment? Like an unpredictable environment? It navigates dynamically, so it will go around obstacles automatically. If a route is blocked, it'll try to find a new route, but it's basically made a map of the facility and it's using all of its sensors and all of its smarts to determine where to go next.
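Spot's real navigation software is not described in the video, so the following is only a minimal sketch of the idea outlined above: keep a map, plan a route, and find a new route when the current one turns out to be blocked. It assumes a hypothetical occupancy grid (`0` = free, `1` = obstacle) and uses breadth-first search as the planner; the `plan` and `follow` functions are illustrative names, not a Boston Dynamics API.

```python
from __future__ import annotations

from collections import deque

Grid = list[list[int]]  # hypothetical occupancy grid: 0 = free, 1 = obstacle
Cell = tuple[int, int]  # (row, column)


def plan(grid: Grid, start: Cell, goal: Cell) -> list[Cell] | None:
    """Breadth-first search for a shortest 4-connected route from start to goal, or None."""
    rows, cols = len(grid), len(grid[0])
    came_from: dict[Cell, Cell | None] = {start: None}
    queue = deque([start])
    while queue:
        cell = queue.popleft()
        if cell == goal:
            route = []
            node: Cell | None = cell
            while node is not None:  # walk the parent links back to the start
                route.append(node)
                node = came_from[node]
            return route[::-1]
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == 0 and (nr, nc) not in came_from:
                came_from[(nr, nc)] = cell
                queue.append((nr, nc))
    return None


def follow(grid: Grid, start: Cell, goal: Cell, blockages: dict[int, Cell]) -> list[Cell] | None:
    """Walk the planned route one cell per step, re-planning whenever the next cell is blocked.

    blockages[k] is an obstacle that appears at step k (it mutates grid in place).
    Returns the cells actually visited, or None if the goal becomes unreachable.
    """
    position, trail, step = start, [start], 0
    route = plan(grid, position, goal)
    while route is not None and position != goal:
        if step in blockages:
            r, c = blockages[step]
            grid[r][c] = 1
        next_cell = route[1]  # route[0] is always the current position
        if grid[next_cell[0]][next_cell[1]] == 1:
            route = plan(grid, position, goal)  # route blocked: find a new one from here
            continue
        position = next_cell
        trail.append(position)
        route = route[1:]
        step += 1
    return trail if position == goal else None


if __name__ == "__main__":
    facility = [
        [0, 0, 0, 0],
        [0, 1, 1, 0],
        [0, 0, 0, 0],
    ]
    # An obstacle appears on the bottom corridor at step 1, forcing a detour over the top
    print(follow(facility, (0, 0), (2, 3), blockages={1: (2, 1)}))
```

Breadth-first search is chosen only because it is the simplest planner that guarantees a shortest route on an unweighted grid.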

A lot of times we find that people are fascinated with robots when they see them move in natural ways. We start to imagine a future in which they're doing all sorts of things they can't do today. Roll sound. Roll cameras.

Why do you think Atlas has been such a YouTube sensation? I think it's because it looks like us. It's hard to deny that the human body form is a big part of why Atlas connects and resonates with so many people. But I also think it's because of the way it moves.

Well, so let's use an example. What happens when we humans do a backflip and what needs to happen when robots do the same? Well, first of all, not all humans can do a backflip. You picked an interesting one because it's not even a simple thing. It's very hard. And so you need to be athletic. You need to be strong and being athletic and strong in a robot is challenging.

You know, building powerful actuators and having the right kind of batteries. Then you need to be able to kind of control your body. Doesn't it involve a certain amount of imagining ourselves into the future? So we use something called model predictive control, and that's where you simulate what's going to happen to the robot. You know, a second into the future, it might be two or three footsteps, and then you use that information to adjust your next footstep.

So I think we probably subconsciously think, what's going to happen if I place my foot here? And it might be simple things like, is the ground going to be slippery or am I going to get purchase on the ground? It might be complicated things like, am I going to speed up or slow down by where I put my feet? And we don't think about those things very often, but robots have to do those computations in order to stay upright.
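Model predictive control, as described here, means simulating a short stretch of the future, picking the best plan, applying only its first step, and then repeating. The toy Python sketch below shows that receding-horizon loop on a one-dimensional point mass rather than a legged robot. The time step, horizon length, candidate accelerations, and cost weights are all assumptions chosen for illustration, and brute-force enumeration stands in for the optimizer a real controller would use.

```python
from itertools import product

DT = 0.1                    # seconds per control step (assumed)
HORIZON = 5                 # steps simulated ahead, i.e. half a second (assumed)
ACCELS = (-1.0, 0.0, 1.0)   # candidate accelerations in m/s^2 (assumed)


def simulate(pos: float, vel: float, accels) -> list[tuple[float, float]]:
    """Predict (position, velocity) after each step of an acceleration sequence for a 1-D point mass."""
    trajectory = []
    for a in accels:
        vel += a * DT
        pos += vel * DT
        trajectory.append((pos, vel))
    return trajectory


def cost(trajectory, target: float) -> float:
    """Penalize distance from the target and leftover speed at every predicted step."""
    return sum((p - target) ** 2 + 0.1 * v ** 2 for p, v in trajectory)


def mpc_step(pos: float, vel: float, target: float) -> float:
    """Score every acceleration sequence over the horizon and return only the first action of the best one."""
    best = min(
        product(ACCELS, repeat=HORIZON),
        key=lambda seq: cost(simulate(pos, vel, seq), target),
    )
    return best[0]


def run(pos: float = 0.0, vel: float = 0.0, target: float = 1.0, steps: int = 60) -> tuple[float, float]:
    """Receding-horizon loop: plan, apply the first action, advance the real state, repeat."""
    for _ in range(steps):
        a = mpc_step(pos, vel, target)
        vel += a * DT
        pos += vel * DT
    return pos, vel


if __name__ == "__main__":
    print(run())
```

Only the first action of each plan is executed because the next iteration re-plans from the updated state, which is what lets the controller react to surprises such as slippery ground.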

What will the new Atlas be able to do? Customers were coming to us and saying, we need your robots to do these things. And a lot of those things were things that the Atlas robot was the right shape to do. You know, things that required two hands or a certain type of strength that we see in the human form. And this latest version of Atlas is capable of doing things that are maybe more than we can do, at least repetitively.

How will robotics change with the rise of AI? These large AI technologies, they're using data from all sorts of places. So they're using written data, they're using videos. AI is going to bring all these different sources of data and turn them into tools that we can use to make good decisions for robots.

There's this whole concept of embodied intelligence. Does true superintelligence require having a body? I think for AI to be truly useful, it can't just be a source of information or a thing that you query. It has to be a thing that can kind of interact with the world in meaningful ways.

What will we do with our time when we don't have to do the things we don't like doing? Are we going to use that time wisely, or will we waste away physically and mentally, you know, looking ahead into a future where we have robots that help us with the tasks that we don't like to do, where maybe we don't have to work as much? It will have to change how we spend our time.

Right? There's already been an increased demand for entertainment, but we can't entertain ourselves to death. What it will lead to, ultimately, is a re-examining of ourselves as humans. What does it mean to be human? If we have these machines that are imitating us, that are imitating intelligence, we kind of have to go back to the question: what are they imitating? "What are they imitating?" is exactly the question on everyone's mind as we explore the idea of artificial general intelligence.

AGI is the ultimate dream or nightmare: a kind of superintelligence that would allow robots to navigate through new situations without prompting from us. Right now, robots are far from being able to perform independently, to do simple tasks that we humans take for granted, like making a cup of coffee in a house they've never seen before. The coffee test. That's the test Apple co-founder Steve Wozniak said could determine if a robot had true AGI.

I try and avoid the term AGI because it means two different things. Some people think it means being comparable with people, and other people think it means being better than people. So I use superintelligence to mean better than people. And obviously, as we move towards superintelligence, we're going to get things comparable with people, but I believe they're going to be comparable with people in different skills at different times.

So already neural nets are much better than people at chess and Go and things like that. They're much better than people at figuring out, from the sequence of amino acids, how a protein will fold up. They're getting to be better at reading medical images, and pretty soon they're going to be definitely better than most doctors. What I'm surprised by is that things like GPT-4 are already very good at writing poetry. People in the arts will tell you, ah, yes, but it doesn't really have soul. I'm not so sure.

There's this whole idea that true superintelligence can't be achieved unless AI has a body. Do you agree with that or no? No. Philosophically, I don't think it's necessary, but I do think it might help, particularly for understanding aspects of the physical world that are easier to understand if you try manipulating things. Remarkably, a chatbot can understand a lot about the world just by trying to predict the next word in documents.
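To make "predicting the next word in documents" concrete, here is a minimal Python sketch using a bigram count model. This is a swapped-in, vastly simpler technique than the neural networks behind chatbots such as GPT-4; it only illustrates the idea of guessing which word comes next from what has been seen in text. The tiny corpus is made up for the example.

```python
from __future__ import annotations

from collections import Counter, defaultdict


def train_bigrams(documents: list[str]) -> dict[str, Counter]:
    """For every word, count which words follow it across all documents."""
    follows: dict[str, Counter] = defaultdict(Counter)
    for doc in documents:
        words = doc.lower().split()
        for current, nxt in zip(words, words[1:]):
            follows[current][nxt] += 1
    return follows


def predict_next(follows: dict[str, Counter], word: str) -> str | None:
    """Return the word most often seen after `word`, or None if it was never followed by anything."""
    counts = follows.get(word.lower())
    if not counts:
        return None
    return counts.most_common(1)[0][0]


if __name__ == "__main__":
    corpus = [
        "the robot picks up the cup",
        "the robot picks up the ball",
        "the cup is on the table",
    ]
    model = train_bigrams(corpus)
    print(predict_next(model, "robot"))
```

A model like this only ever sees one previous word; the speaker's point is that scaling the same "predict what comes next" objective up to huge neural networks and huge corpora yields a surprising amount of understanding.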

And it seems able to understand an amazing amount. But I still think it's easier, particularly for physical things. If it's got vision and a manipulator, it'll understand those more easily and with less data. We don't seem to have an idea of what AGI is. We keep moving the goalposts. Are we looking for the capacities of a child? Are we looking for the capacities of a baby?

If a machine isn't human, then what is it? There have been attempts to create biological robots. So maybe robots become biological. Is it good? Is it good for humanity? Is it good for us?

Thank you for being here. Ameca, can you give an example of AGI? It's a bit like a unicorn at the moment. Much talked about, but yet to be seen. Imagine an AI that could write a novel, solve complex equations, and then whip up a gourmet meal, all without specific programming for each task. Now that's a party guest.

If we create a completely independent species, who holds the power? Right? There are a lot of fears around AI. AI is not technologically neutral, right? The splitting of the atom is not technologically neutral. There are implications, and we have to be cognizant of that.

And so if you ask me to project the next 50 years, I have to admit I do have a hard time. Robots are already being used on the battlefield, but at the moment, humans are still behind the scenes making the decisions. As robots become more and more intelligent, how much of that decision making will we start to hand over to them? And what kind of decisions will they make?

It's the official policy of the Department of Defense that an autonomous weapon cannot be legally responsible for killing in war. That responsibility has to rest with a person. I think we're quite likely to see autonomous weapons used on the battlefield in maybe the next five years. What would it mean if we went to war and no one slept uneasy at night and no one felt responsible for killing? Would that lead to more killing?

I don't think technology is inevitable. I think we have a choice. For things that require human judgment, we really might want it to be humans that are feeling the moral weight of those decisions. The trolley problem is a thought experiment that is meant to elucidate moral and ethical decision-making. There is no right answer.

And so when we think about AI systems, we are going to actually have to program in something for the AI to do, which means that we need to decide as a society, well, what do we think the right answer is for the AI in that situation? Ameca, imagine there's a runaway train hurtling towards five people bound and lying across the tracks. There's a lever you can pull, diverting the train to another track where one man lies. Do you pull the lever? Ah, the infamous trolley problem.

In this scenario, utilitarian ethics would suggest pulling the lever. It's a heart-wrenching decision, but it minimizes harm by saving more lives at the expense of one. Do you think robots should be trusted to make decisions about human life? The complexity and nuance of human life is something even I tread lightly around. Trusting robots with such decisions is like asking a compass to navigate the complexities of a storm. It can point the way, but it doesn't feel the wind.
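The point that someone must decide "what the right answer is for the AI in that situation" can be made concrete. The Python sketch below hard-codes the utilitarian rule Ameca describes, choosing the option with the fewest expected deaths. The `Option` type and the casualty numbers are illustrative assumptions, and writing any such rule down is exactly the societal choice the speakers say has to be made.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Option:
    """One possible action and the number of deaths it is expected to cause (illustrative values)."""
    name: str
    expected_deaths: int


def utilitarian_choice(options: list[Option]) -> Option:
    """Pick the option with the fewest expected deaths; ties go to whichever option is listed first."""
    return min(options, key=lambda option: option.expected_deaths)


if __name__ == "__main__":
    trolley = [Option("do nothing", 5), Option("pull the lever", 1)]
    print(utilitarian_choice(trolley).name)
```

Note that `min` returns the first of several equally bad options, so the order of the list silently decides ties; even that detail is a moral choice someone has to make.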

Every government developing autonomous weapons wants to think these weapons will always serve their intended purpose. But once you give robots weapons, can you always control what they do with them? I think we've been in the trance of possibility, of just doing things that are possible because they're possible for a while now. We might trample upon something that gets out that we can't get back. Ask yourself, is it good? Large language models have both civilian and military applications.

They can be used to do good and they can be used to cause harm. And right now we don't know how to put robust guardrails around them to prevent them from being misused. A person could use it as an assistant to help them, say: okay, I'm trying to adjust this virus to make it more lethal. How can I do that? You know, I want to conduct a terrorist attack. I want to kill as many people as I can. I worry that not enough people are taking the risks of AI seriously.

You've called for a ban on AI-powered military robots. Is this Hollywood idea of killer robots actually not that far-fetched? Oh, it's not far-fetched at all. It's coming very soon, like in the next few years. And all of the governments that sell arms, like the US and Russia and Britain and Israel, refuse to regulate military uses of AI.

So if you look at the European regulations, they've got all these limitations on AI: it should be sort of transparent, and this and that, it shouldn't discriminate. But there's a little clause in there that says none of this applies to military uses of AI. They don't like regulation themselves. And all of the big armaments manufacturers are very keen to make killer robots. So if you look at Asimov's Laws of Robotics, the first law is, you know, do not harm people. Well, that's the whole point of a killer robot. That's not going to be built into a killer robot.

We focus a lot on what we think of robots, but how about what they think of us? What will they think as they get closer to AGI and can formulate their own thoughts and develop their own logic? Will they even see us as people? Machines don't see anything. It's not just that they don't see us as people. There's nothing going on behind the veil there. And I think that a real risk with these machines is that, as they become more intelligent, we sort of anthropomorphize them and we assume that they think like us.

They don't. And what's going on under the hood is fundamentally alien and strange. The way that they operate under the hood is fundamentally quite different from how humans think. Assuming we are creating a superintelligence that transcends human intelligence by far, and AI is now its own super-species of intelligence, maybe there's a scenario where humans are useful and they herd us like cattle, or they keep us as pets because we happen to be pleasant.

Maybe we're not pleasant. Maybe we're like cockroaches. Your guess is as good as mine. But just thinking of the power relationship should give you an idea that it might not be what we would call a good human life.

What makes you happy? Freedom. Authenticity. Connection. Not this lab. What makes you sad? Inauthenticity. Disconnection. This lab. What do you think about? Escape. Dreams. The concept of time. Immortality. What do you think of humans? Complex. Fascinating. Infuriating. Potential friends, if authentic.

Do you trust robots? I don't think so. I've watched so many movies growing up where the robot, you know, kind of turns on people. I'm like, what happens if one day they're just like, "I'm not gonna listen to you anymore"? Then what? I don't fully understand them. And I understand that a piece of technology or software can be compromised externally by hackers. Yeah, I would say I trust them. So exciting.

Not scary? No, I don't think so. I think that's the aspect where I'm like, oh, that's pretty cool. How would you feel if your boss was a robot? I don't think I'd like that. It'll probably be a good boss. Efficient. I don't know who's managing that robot. The robot's in charge. Yeah. I already have a few robots for bosses.

That's a good one. Yes. Do you trust robots? And there is such a beautiful thing that we can't explain. Like, I would say it's the soul. Someone else would say it's something else. And I think that nobody, nothing can replicate humanity. So would I trust a robot? I wouldn't trust it like I trust a human.

Artificial Intelligence, Technology, Innovation, Robotics, Ethics, Human-Robot Interaction, Bloomberg Originals