This video explores the phenomenon of hallucinations in artificial intelligence models. The discussion centers on understanding what causes these errors, how models generate answers, and why they sometimes produce incorrect information while appearing confident in their accuracy. It reviews the concept of AI hallucinations, using real-world examples to illustrate how models can confidently present fabricated information.

The video investigates possible strategies to mitigate hallucinations, such as instructing AI not to fabricate answers, though this method proves insufficient because a model has no concept of what is true. Other strategies discussed include checking consistency by asking the same question repeatedly and comparing embeddings of the answers, augmenting the model with known good sources, and fine-tuning. The video argues that improving training datasets is the most effective fix, and that new answer verification steps may emerge in the future, although hallucinations are unlikely to disappear with current technology.


Key Vocabulary and Common Phrases:

1. hallucinations [həˌluːsɪˈneɪʃənz] - (n.) - Errors in AI output where the model provides incorrect or fabricated information while being confident. - Synonyms: (delusions, fabrications, misperceptions)

So what are hallucinations?

2. bogus [ˈboʊɡəs] - (adj.) - Not genuine; fake or false. - Synonyms: (fake, false, fraudulent)

It seemed like a slam dunk for the plaintiff, but in reality, every single example provided was bogus.

3. plaintiff [ˈpleɪntɪf] - (n.) - A person who brings a case against another in a court of law. - Synonyms: (complainant, applicant, accuser)

It seemed like a slam dunk for the plaintiff, but in reality, every single example provided was bogus.

4. semantic [sɪˈmæntɪk] - (adj.) - Relating to meaning in language or logic. - Synonyms: (meaningful, interpretive, lingual)

It creates an array of floating point numbers that represent the semantic meaning of the text.

5. embeddings [ɛmˈbɛdɪŋz] - (n.) - Vectors or numerical representations of data features used in AI to capture semantic meaning. - Synonyms: (vectors, representations, mappings)

Remember we talked about embeddings earlier in this course? It's one of the key concepts with retrieval-augmented generation.

6. augmented [ɔːɡˈmɛntɪd] - (adj.) - Made greater in size or value; enhanced. - Synonyms: (enhanced, increased, boosted)

It's one of the key concepts with retrieval-augmented generation.

7. fine-tuning [faɪn-ˈtuːnɪŋ] - (v.) - Making small adjustments to improve or perfect something. - Synonyms: (adjusting, calibrating, refining)

Although you aren't really adding new knowledge with fine tuning, you're telling the model which of the concepts it knows about are more important.

8. kludgy [ˈkluːdʒi] - (adj.) - Describing a solution to a technical problem that is inelegant or makeshift, even if it works. - Synonyms: (inelegant, makeshift, awkward)

Maybe someone will come up with another answer verification step in the future, but most attempts at that have been kludgy at best.

9. mitigate [ˈmɪtɪˌɡeɪt] - (v.) - To make less severe, serious, or painful. - Synonyms: (alleviate, lessen, diminish)

The video investigates possible strategies to mitigate hallucinations, such as instructing AI not to fabricate answers.

10. verification [ˌvɛrɪfɪˈkeɪʃən] - (n.) - The process of establishing the truth, accuracy, or validity of something. - Synonyms: (confirmation, validation, substantiation)

Maybe someone will come up with another answer verification step in the future, but most attempts at that have been kludgy at best

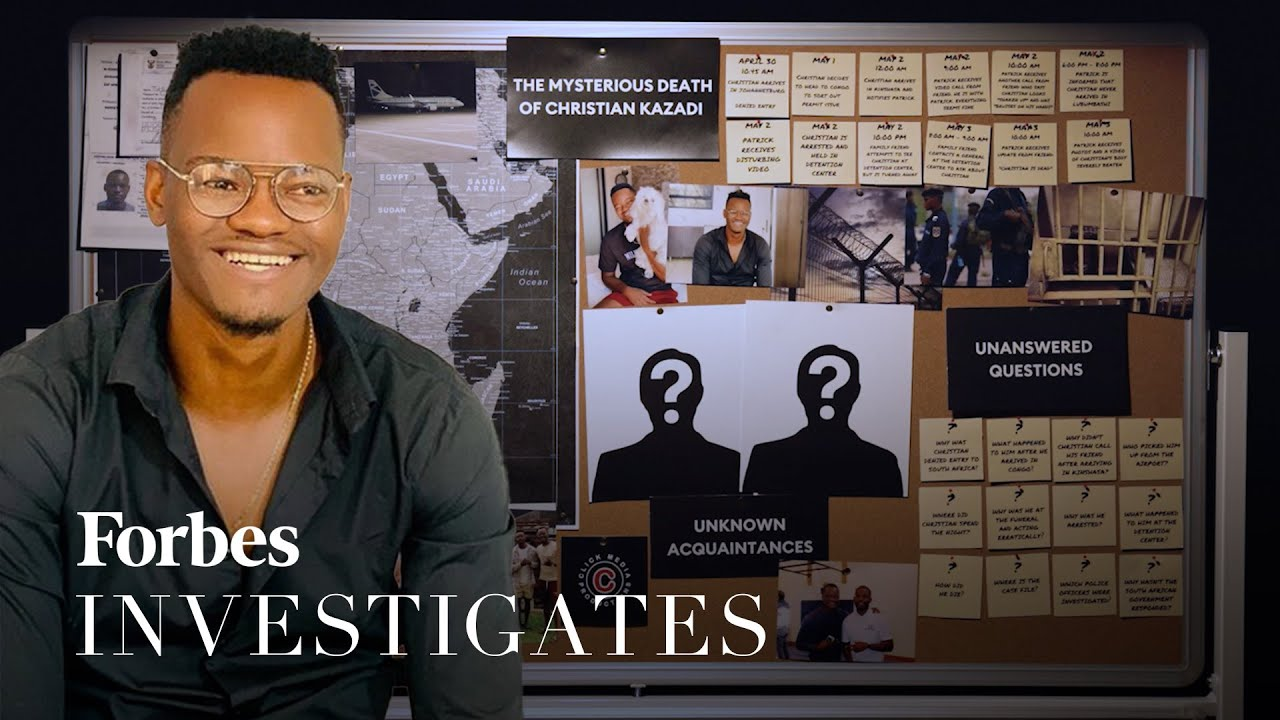


Imagine a world where AI never hallucinates. But is that actually possible? These models are supposed to have the knowledge of the world. So how do they make these mistakes? What are they and where do they come from? In this video we're going to try to get a better understanding of what causes these errors in the first place, and also look to see if there are any strategies to avoid them. A common strategy that I've seen folks use is to simply add to the prompt something like, "if you don't know the answer, don't make something up." This approach may seem straightforward enough, but it's not as easy as that. You may have even tried that and it might have even felt like it worked sometimes, but it doesn't overall. So what does a model know about what it knows, and how does it generate an answer? That's probably the key thing to understand in order to figure out what hallucinations are and how they happen.

Now, I'm Matt Williams and I was a founding maintainer of the Ollama project. I'm no longer part of the team, but I'm just as passionate about what it can do in the hands of folks that understand it. And so I started putting together this free course on Ollama to help get it into more hands and see more cool projects come out of it.

So what are hallucinations? I guess the simple version is that they're where the model gets the answer completely wrong and yet it seems super confident that it's correct. One of the classic examples of this was a lawyer representing a guy whose leg was hurt after the beverage cart on, I think, an Avianca Airlines flight ran into it. The lawyer filed a brief with the court showing how his client would win the lawsuit based on a collection of previous similar cases. It seemed like a slam dunk for the plaintiff, but in reality, every single example provided was bogus. They didn't exist, but each one sounded convincing and included an equally convincing-looking URL to get more information. But the lawyer never clicked those links to verify, and he got into trouble for it. So whenever working with AI models, it's always important to verify your results with known good sources. And a known good source isn't always what is factually true.

I think I spent a while trying to figure out what's the right way to say that. Maybe it's better to just give some examples. We know that the earth is roughly a sphere, but there is a percentage of folks who believe it is flat. So if a model says that the world is flat, is that a hallucination? Or, in the U.S., the 2020 election was fairly won by one party, as confirmed by every court case that has come up, but portions of the other party's supporters believe it was stolen. If the model says something about it one way or the other, is the model hallucinating? Maybe it's in the eye of the beholder. But hopefully you think this video is awesome no matter what you believe.

And if you like this one, there are many, many more to come in this course and on my channel. The best way to learn about new videos as they come out is to like this one and subscribe to the channel. I'm working on my first million subscribers and your support will help me get the missing 963,000 subs that I need to get there.

Okay, back to hallucinations. So how do hallucinations happen? Well, there are a couple reasons for them. First is the data set that the models are trained on. The sources come from all over the Internet. And yeah, I don't know if you've looked around lately, but there's some pretty crazy people on the Internet. They believe in all kinds of strange things and the model is trained on the sane stuff and that other stuff. So it stands to reason that some of the crazy stuff shows up in the outputs. In fact, one researcher pushed crazy stuff into the model intentionally to see if they could taint the model over time. And they did.

The other big reason for hallucinations is that models don't know what they know. They don't actually know anything. In fact, the term hallucination is not a very accurate term because the answer isn't a departure from what the model knows to be true. They just figure out, based on the context, what is statistically the next most likely word. You can't tell a model to not make something up because it doesn't have any concept of what's true or not. And just because a model hallucinates one time doesn't mean it's going to give the same wacky answer every time you ask the same question. That's why asking the model to not make up stuff feels like it works sometimes, even if it didn't actually change anything.
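To make the "statistically next most likely word" idea concrete, here is a minimal sketch of weighted sampling. The prompt, the candidate words, and their probabilities are all invented for illustration; a real model scores every token in its vocabulary and nothing here reflects how any particular model is implemented. The point is only that the same question can produce different answers from run to run, and that truth never enters the calculation.

```python
import random

# Toy next-word probabilities for a prompt such as "The capital of Australia is".
# These numbers are made up for illustration only.
NEXT_WORD_PROBS = {"Canberra": 0.6, "Sydney": 0.3, "Melbourne": 0.1}


def sample_next_word(probs, rng):
    """Pick one word at random, weighted by its probability."""
    words = list(probs)
    weights = [probs[word] for word in words]
    return rng.choices(words, weights=weights, k=1)[0]


def sample_many(probs, n, seed=0):
    """Ask the 'same question' n times and count how often each word comes back."""
    rng = random.Random(seed)
    counts = {word: 0 for word in probs}
    for _ in range(n):
        counts[sample_next_word(probs, rng)] += 1
    return counts


if __name__ == "__main__":
    # The less likely (and here, wrong) words still show up some of the time.
    print(sample_many(NEXT_WORD_PROBS, 1000))
```

Nothing in the sampling step checks whether a word is correct; it only follows the weights, which is why an instruction like "don't make something up" has nothing to act on.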

This idea leads to one of the thoughts for a solution to the problem. It's a multi-step process. First you ask a question and get back an answer from the model. Then you ask that question again and again and again and again and so forth. You get back a whole bunch of answers. Now create an embedding for each of those answers. Remember we talked about embeddings earlier in this course? It's one of the key concepts with retrieval-augmented generation. It creates an array of floating point numbers that represents the semantic meaning of the text. Then you compare all the embeddings from all the results. And if all of them, or a large group of them, are relatively close to each other, the thought is that these answers contain fewer hallucinations. Maybe a safer approach is to have the model rely on known good sources.
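The repeated-questioning check described above can be sketched roughly as follows. This is a sketch under assumptions, not the method from the video: `ask` and `embed` are hypothetical callables you would supply yourself (for example, thin wrappers around whatever model and embedding model you run), cosine similarity is one common way to compare embeddings, and averaging all pairs is just one possible way to summarize "relatively close to each other."

```python
import math
from itertools import combinations
from typing import Callable, List, Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two vectors; 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0  # treat an all-zero vector as similar to nothing
    return dot / (norm_a * norm_b)


def mean_pairwise_similarity(embeddings: List[Sequence[float]]) -> float:
    """Average cosine similarity over every pair of embeddings."""
    pairs = list(combinations(embeddings, 2))
    if not pairs:
        raise ValueError("need at least two embeddings to compare")
    return sum(cosine_similarity(a, b) for a, b in pairs) / len(pairs)


def consistency_score(
    question: str,
    ask: Callable[[str], str],               # hypothetical: returns one model answer
    embed: Callable[[str], Sequence[float]],  # hypothetical: returns an embedding
    n: int = 5,
) -> float:
    """Ask the same question n times, embed each answer, and compare them."""
    answers = [ask(question) for _ in range(n)]
    vectors = [embed(answer) for answer in answers]
    return mean_pairwise_similarity(vectors)


if __name__ == "__main__":
    # Stand-ins so the sketch runs without a model; replace with real calls.
    fake_answers = iter(["blue", "blue", "green", "blue", "blue"])
    fake_vectors = {"blue": [1.0, 0.0], "green": [0.0, 1.0]}
    score = consistency_score(
        "What color is the sky?",
        ask=lambda q: next(fake_answers),
        embed=lambda text: fake_vectors[text],
    )
    print(f"consistency: {score:.2f}")
```

A higher score means the answers agree with each other more, not that they are true. Where to draw the line between "consistent" and "suspicious" is not something the video specifies, so any threshold would be your own assumption.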

And we've covered two different ways of adding those. First we looked at RAG, and then we looked at adding web search results. Of course you have to add documents or web pages that agree with your view of the world. If you're team science, then maybe you point to NASA and the European Space Agency and other similar sources. But if you're a flat earther, then you look to... I don't know. I don't know where you go for that stuff. Anyway, point to the places that contain your version of the truth. Fine-tuning can help with this too. Although you aren't really adding new knowledge with fine-tuning, you're telling the model which of the concepts it knows about are more important. And that can lead it to come up with answers that don't rely on unrelated concepts, which would lead to answers with more hallucinations.
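As a rough illustration of pointing the model at known good sources, the sketch below pastes trusted snippets into the prompt ahead of the question, which is the general shape of what RAG and web search augmentation do once the relevant text has been retrieved. The function name, the prompt wording, and the example snippets are assumptions made for this sketch, and the retrieval step itself is left out.

```python
from typing import List


def build_grounded_prompt(question: str, sources: List[str]) -> str:
    """Build a prompt that places trusted source text ahead of the question."""
    if not sources:
        raise ValueError("provide at least one source snippet")
    # Number the snippets so the answer can refer back to them.
    numbered = "\n".join(
        f"[{i}] {text}" for i, text in enumerate(sources, start=1)
    )
    return (
        "Answer the question using only the sources below, "
        "and mention the source number you relied on.\n\n"
        f"Sources:\n{numbered}\n\n"
        f"Question: {question}\n"
        "Answer:"
    )


if __name__ == "__main__":
    # Example snippets are placeholders; use text from sources you trust.
    prompt = build_grounded_prompt(
        "What shape is the Earth?",
        ["The Earth is roughly a sphere.", "The Earth orbits the Sun."],
    )
    print(prompt)
```

The instruction in the prompt is not a guarantee. What helps is that the relevant text is now in the context, so the statistically likely continuation leans on it; the answer is still worth checking against the sources.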

The best way to get rid of the hallucinations is to improve the source data sets when training the model. And it looks like that has generally happened with each new model. I feel like reports of hallucinations have gone down a bit with each model, but then again, each new model seems to continue to have them. Maybe folks are just more used to them and accept them as a known downside. Despite being what some think is the state of the art, users of GPT-4o and o1 and Claude and others still report plenty of hallucinations in the outputs. So will we get to a point where hallucinations go away? Probably not. At least not using the current technology. Models will continue to get better, but will always have what are referred to as hallucinations. It's not until whatever comes after large language models that we may have a way to eliminate them from that initial answer generation step.

Maybe someone will come up with another answer verification step in the future, but most attempts at that have been kludgy at best. So while I would love to say there's an answer to hallucinations that will make them go away forever, there isn't. They can be frustrating, but that doesn't mean that LLMs aren't amazing at what they do. You should still leverage their strengths, and they can let you accomplish some pretty incredible things. Hopefully you now understand them a bit more and why they come up. What's the craziest hallucination you've seen when working with models? Share them in the comments below. I'd love to see the crazy stuff models come up with. Well, that's about all for this video in the free Ollama course. Again, click subscribe to find out about the next one when it comes out. Thanks so much for watching. Goodbye.

Artificial Intelligence, Technology, Education, AI Hallucinations, Model Training, Error Mitigation