The video documents a visit to Vancouver, where a startup company, Sanctuary AI, is developing a highly capable and lifelike robot infused with artificial intelligence. Upon crossing the border from Seattle, the viewer is introduced to the unique and picturesque scenery of Vancouver. The journey is more than a scenic exploration; it's an investigative dive into the future of robotics at Sanctuary AI, revealing their advances in creating a humanoid robot equipped with advanced dexterous hands and autonomous capabilities.

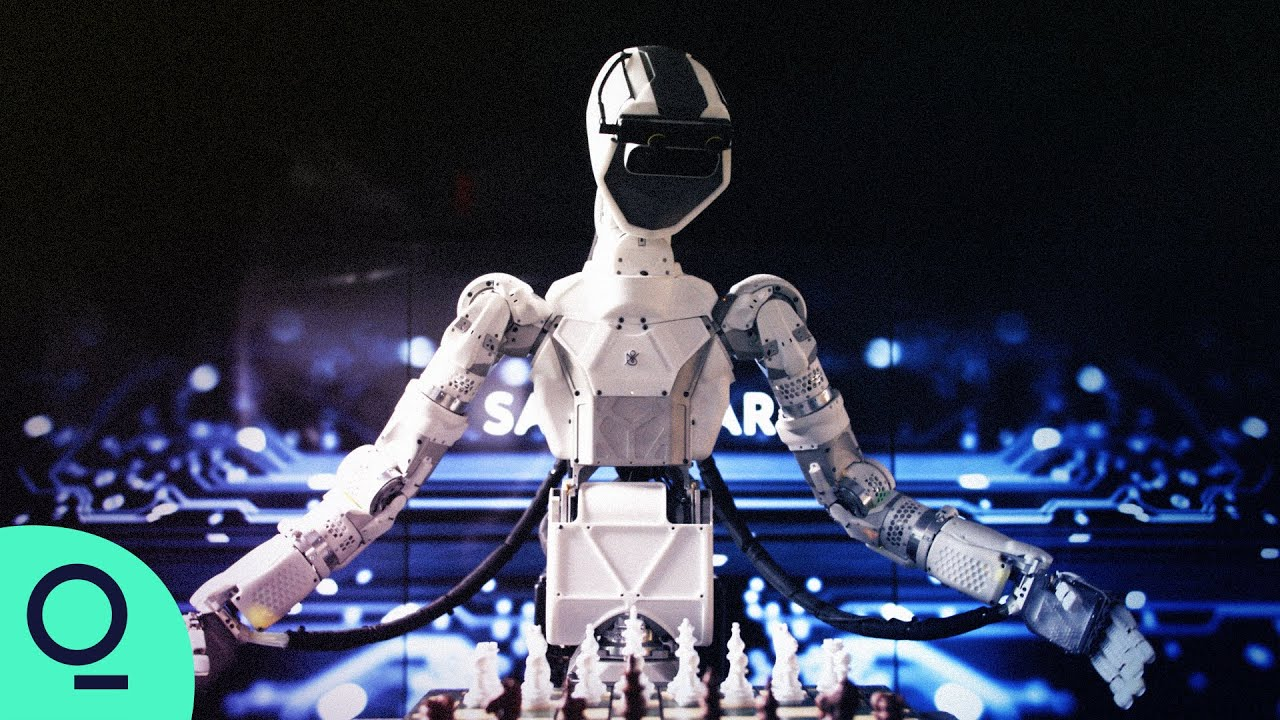

The robot's core capabilities are demonstrated through tasks that mimic real-world complexity, such as playing chess while remotely piloted by a human operator, Eric. The video explores the technology's ability to perform tasks with human-like dexterity; human pilots currently supply the training data, and the company aims to develop a fully autonomous system eventually. The vision is for robots to work alongside humans, supporting labor markets by filling job gaps where human labor is unavailable or insufficient.

Key Vocabulary and Common Phrases:

1. dexterity [dɛkˈstɛrɪti] - (noun) - Skill and grace in physical movement, especially in the use of hands. - Synonyms: (agility, nimbleness, adroitness)

And then a big part of that would be the dexterity of the hands.

2. android [ˈænˌdrɔɪd] - (noun) - A robot designed to resemble a human, both in appearance and functionality. - Synonyms: (humanoid robot, machine person, robotic figure)

Sanctuary claims to have the smartest, most capable android on the planet.

3. autonomous [ɔˈtɒnəməs] - (adjective) - Capable of operating independently without human intervention. - Synonyms: (independent, self-governing, self-sufficient)

So what you're actually seeing is fully autonomous grasping and manipulation of an object.

4. humanoid [ˈhjuːməˌnɔɪd] - (adjective / noun) - Resembling human form or having human characteristics. - Synonyms: (anthropoid, manlike, people-like)

This is a general purpose robot. It's a humanoid in form and function.

5. piloting [ˈpaɪlətɪŋ] - (noun) - The act of controlling or operating a vehicle or vessel. - Synonyms: (navigating, steering, guiding)

Okay? So if you remember earlier, we saw the VR type of piloting. Imagine now, instead of having to control the robot with all this VR gear, you just have a drop-down list of options.

6. manipulation [məˌnɪpjəˈleɪʃən] - (noun) - The action of handling or controlling something skillfully. - Synonyms: (operation, maneuvering, handling)

So what you're actually seeing is fully autonomous grasping and manipulation of an object.

7. mundane [ˈmʌndeɪn] - (adjective) - Commonplace or ordinary; not interesting. - Synonyms: (boring, routine, banal)

So I'd like to see these robots doing the sort of boring, mundane parts of tasks.

8. discrepancies [dɪˈskrepənsiz] - (noun) - Differences or inconsistencies between two things. - Synonyms: (variations, divergences, incongruities)

There are still some discrepancies between reality and the simulation, but that gap is closing all the time.

9. depiction [dɪˈpɪkʃən] - (noun) - The action or result of showing something in a form, especially art or literature. - Synonyms: (portrayal, representation, illustration)

This one we call the outer world model. So this is a digital twin of reality, and this is a depiction of the world.

10. conception [kənˈsɛpʃən] - (noun) - The ability to form or understand an idea or plan. - Synonyms: (idea, understanding, notion)

What you guys are making is still sort of, in some ways, people's first conception of where all this was heading.

The World's Smartest Robot Is Living in Vancouver

A road trip from Seattle to Vancouver takes about 3 hours. There's pretty scenery to look at along the way and a really exciting border to cross. Once you make it into British Columbia, everything changes in an instant. It's like entering another world. In Vancouver, most public servants are required to drive Teslas. So you see the things just about everywhere. All of the clocks here run on steam and play songs, and the airports are in the water. Oddities like these aside, it would be hard to dream up a more beautiful city. Beautiful mountains, beautiful buildings, beautiful geese, beautiful graffiti complemented by beautiful filthy alleyways. And if you know the right spots, you can eat like a king. In Vancouver, this counts as a light lunch in Chinatown. That is good. And if you know the right guy at the right hole in the wall, you can get what you need.

But I'm in Vancouver for more than just mainlining maple syrup. There are people who would say that building a super lifelike, extremely intelligent robot is a terrible idea, the kind of thing that could lead to the downfall of the human species. But today I will visit a startup called Sanctuary AI. They've built this crazy robot that has artificial intelligence baked into it, and it also has these very lifelike hands. Sanctuary claims to have the smartest, most capable android on the planet. The only thing we can say for certain is that because the robot is being built in Canada, it will be ridiculously polite. I'm sorry. Excuse me. My bad.

Sanctuary AI was started by a handful of engineers in 2019. The company's CEO is this fella, and its chief technology officer is Suzanne Gildert. If Suzanne looks familiar to some of you, that's because she's been on Hello World before. Her previous company was called Kindred, and it made robotic arms that could pick and place items in factories. Suzanne's new company is a lot like her old company, only better. Do you want to shake hands? Yeah, absolutely. Nice to meet you. Nice to meet you.

So this is a general purpose robot. It's a humanoid in form and function. It's designed to be good at doing work tasks. Do I call it an it? How do we refer? I usually refer to the robot as Eric. Eric is our pilot, who you'll see taking control of the robot. He will be our operator today. Hi, Eric. I think people have seen robots at a car factory, but, like, walk us through some of the advances you guys have made versus that type of machine.

Robots that you'll find in industry are special purpose, and they're programmed in a very different way. They're programmed to do a repetitive task over and over again. So that robot, it doesn't really know where things are. It's just executing some pre written routines. So what we're trying to do here is build a robot that is actually aware of its surroundings. It's building up a mental model of everything it's seeing in real time, and is therefore able to do different tasks, more general purpose tasks.

And then a big part of that would be the dexterity of the hands. I know that you guys have been focusing on that for quite some time. Yeah, these are sort of about the fourth generation hands that we built. So maybe, Eric, you can hold out your hand. I can show some of the features. So obviously a human-like hand, four fingers and a thumb. These things you see here are sensors that give the robot a haptic sense of touch. When these pressure sensors are pressed, the data signals go into the software system. They're also relayed to the pilot.

So Eric has, like, a glove on? Yes. And he can feel. Eric, you can feel that when you get poked. Yeah. Why don't we play a game of chess and we can talk through some of the components while we do that. All right, so you're white. You go first, right? Yeah, sounds good. Should have pre-planned my move. I will be using the Sicilian Defense. Just, I'll let you know. Oh, seems I might be outmatched.

Eric, when you're doing this, I mean, are you just seeing the piece like I am, or is there some sort of guide? Do you have a helmet on? Yes, I do. I have a VR headset. So what I'm seeing is stereo vision through the camera you're looking at. Yeah. You can almost see behind you, right? Yeah. Oh, I didn't even see the camera over here.

I think that probably if people saw this for the first time and then they hear that there's a human operator behind it, they would kind of be like, oh, well, that's not... I wanted the full robot. So a lot of people ask, well, you know, isn't this just a person behind the scenes? But having a person behind the scenes allows us to gather lots of very human-like training data to train the software system, train the AI algorithms to be more human-like over time. So we're currently just using VR-type rigs, sort of off-the-shelf gear, but we're planning on having some new rigs made that actually give force feedback to the pilot as well.

So, Eric, if you hold your arm out and I did this, the pilot would actually be able to feel the force feedback as well. These robots are designed to work alongside people, so we need them to be very safe. And one of the things about this robot and its motor system is it actually operates in a force-controlled mode. So what that means is the robot will try and hold the position of the arm, but if a force is applied that is greater than a certain maximum force, it will give.
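The force-controlled behavior described here (hold position, but yield once a push exceeds a threshold) can be illustrated with a minimal sketch. This is not Sanctuary's controller; the function name `arm_response`, the `compliance` parameter, and all units are hypothetical, chosen only to show the idea.

```python
def arm_response(target_pos, applied_force, max_force, compliance=0.01):
    """Return the arm position after an external push (hypothetical units).

    Below max_force the arm holds target_pos. Above it, the arm gives way
    in the direction of the push, in proportion to the excess force.
    """
    excess = abs(applied_force) - max_force
    if excess <= 0:
        return target_pos  # push is within the limit: hold position
    direction = 1 if applied_force > 0 else -1
    return target_pos + direction * compliance * excess


# A light push is resisted; a strong push makes the arm yield.
held = arm_response(target_pos=0.5, applied_force=10, max_force=20)
yielded = arm_response(target_pos=0.5, applied_force=30, max_force=20)
```

A real controller would run this kind of check continuously inside the motor control loop; the sketch only captures the threshold rule from the description.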

Tell me a little bit about your background, just because I know you've been into electronics and computing since you were a kid, right? Well, I've loved electronics and robotics and things like that since I was little. I did a degree in physics and a PhD in physics and electronics, too. And then from there, I worked in the quantum computing space for a while and eventually found my way into AI. You grew up in the UK? Yeah, that's right.

I know, like, outside of this, you have all these interesting tech-related pursuits. Yeah. So I have quite a lot of interesting hobbies that I do. I do like to do painting. They've kind of moved towards a more tech electronics theme, and they're actually inspired a lot by computer history. So a lot of the paintings you'll see are things like close-ups of old microprocessors. There are even a few that are, like, robot power circuits. So, always thinking about robots. Whose move is it? Eric, I believe it's your turn. I'm not gonna lose to AI. Humanity. Team human. Okay. Okay. I'm okay with that strategy.

Chess is a really interesting game for several reasons. One is it's fairly easy now for software systems to solve the intellectual part of chess. So it's been historically used in AI many, many, many times for teaching machines. What we're really trying to show here, though, is that it's the parts of chess that people find easy, like picking up a piece, moving it, knowing where all the pieces are on the board. Those are the parts that people find very easy. Computer algorithms find that part extremely hard. But also, chess is a stand in for all different kinds of activities that you might have to do in the world of work. Maybe things need organizing in the right locations. You need to take stock of items in a retail store, or you could imagine, instead of chess pieces, these were small parts on an assembly or a sortation line.

I mean, the goal is, eventually, the robot is just smart enough that it's doing its own thing with no human operator? In some jobs, yeah, for certain tasks. So I'd like to see this robot system be able to automate the really mundane, boring parts. Sorry, I gotta readjust something. I would appreciate it if you gave me a minute or two. I'll just let him adjust that and move out of the way of his arm.

So I'd like to see these robots doing the sort of boring, mundane parts of tasks. And then the pilots are now freed up to be more like the overseers or the managers of a group of robots. So you can imagine a group of robots all being controlled by a single pilot. Almost like the person becomes more of a manager, and the robots become more like the manual workers.

Okay, so for about 95% of all jobs, the dexterity of fingers precludes conventional robotic systems from doing them. So we have attempted to build the world's best robotic hands, because hands are really important for work. So we are working on integrating state-of-the-art robots, specifically for manipulation of the world through hands, with a new kind of AI system that integrates a lot of the tools that people have been developing around the world into a platform that's intended to give these robots the kind of understanding of the world that is kind of a baseline for work.

It's interesting because I think there's definitely people who'd look at what you guys are making. They're like, oh, great, now you're gonna take all these factory jobs from people. Well, it's not taking jobs. In fact, I think that it's starting to be recognized that the problem that we face in terms of the employment labor market is actually the opposite problem. There aren't enough people to do the kinds of work that needs to get done to support the continued flourishing of civilization, our way of life.

There are more than 11 million unfilled positions in the US right now. The world needs more workers, especially in the kinds of jobs that people typically don't want to do. Those 11 million open jobs right now, those are the kinds of things that we're going to target, and that's a big number. 11 million jobs. It's only going to grow over time. Birth rates are plummeting, and 11 million robots of our sort in the world doing those jobs doesn't take a single job away from anyone. But 11 million robots out in the world is an enormous business. It's starting to become at the scale of the automotive industry. And I do believe that this is the birth of a new industry. It sort of looks like robotics, and it sort of looks like AI, but it's a new thing, and it's going to rival the biggest industries there are today in importance and scale when it gets going.

Okay, so you're moving in on me quick, distracting me with your fancy hands. I'm going to deal with your knight, because this is really bothering me. Ah, my knights. Yeah, he's a troublemaker. Yeah. What did you get up to this weekend? You know, just robot stuff. A lot of chess. I was practicing for you. I know you're pretty good.

Ooh, I don't like that. I will take this. Put it over here. Not cool. Not cool. Oh, yeah. You don't miss a beat, man. All right. I will concede. I can see you've got me beat.

Very good game. Well played. Thank you, sir. Thank you. So, we saw Eric as the pilot, picking up the chess pieces quite easily, using his human mind. And here the robot is trying to learn what it means to actually pick up a chess piece in the same way that a human would. And it does it by trying over and over and over again.

So, on this screen over here, you can see a close-up of it trying to grab a chess piece. And you'll see it sort of fumbles around a little bit at the start. But after enough training episodes, it will learn to grab it in a very human-like way. There's not, obviously, 10,000 robot arms. This is all simulated. Yeah. So, here we can have thousands or hundreds of thousands of hands trying to pick things up faster than real time.

So you're accelerating the learning rate by millions of times. So the physics engine, the rules that have been put into the software to mimic, like, gravity and the different properties of our world, are good enough that this is actually training the hand? Yeah, they're getting there. So, if you think about how you could have done this, like, a few years ago, you might think, oh, I could use a game engine, maybe, because game engines are pretty good simulated worlds. But then when you get deep into game engines, you find they're sort of optimized to make games look good. They have a lot of animation in them. They don't have a lot of real physics.

So we're actually bootstrapping on game engine technology, but then in our systems we're going beyond that and trying to mimic physics very well. And so even something like the interaction of the end of a fingertip with an object has to be modeled very well. So you need a really good physics engine.
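The trial-and-error learning described above, where the hand fumbles at first and improves over many simulated episodes, can be sketched as a toy example. This is an assumption-laden illustration, not Sanctuary's training method: it swaps the physics engine for fixed, made-up success probabilities and uses a simple epsilon-greedy strategy. The grasp names and the `train_grasp` function are hypothetical.

```python
import random


def train_grasp(success_prob, episodes=5000, epsilon=0.1, seed=0):
    """Learn which candidate grasp works best by repeated simulated attempts.

    success_prob maps a grasp name to its (hidden) chance of success and
    stands in for a physics simulation. Returns the grasp with the best
    observed success rate after training.
    """
    rng = random.Random(seed)
    names = list(success_prob)
    attempts = {n: 0 for n in names}
    successes = {n: 0 for n in names}

    def rate(name):
        # Observed success rate so far (0.0 if the grasp is untried).
        return successes[name] / attempts[name] if attempts[name] else 0.0

    for _ in range(episodes):
        if rng.random() < epsilon:
            grasp = rng.choice(names)      # explore: try a random grasp
        else:
            grasp = max(names, key=rate)   # exploit: use the best so far
        attempts[grasp] += 1
        if rng.random() < success_prob[grasp]:
            successes[grasp] += 1          # simulated attempt succeeded
    return max(names, key=rate)


# Made-up success probabilities for three hypothetical grasps.
best = train_grasp({"pinch_top": 0.2, "pinch_side": 0.5, "wrap_side": 0.9})
```

Because every attempt is simulated, the number of episodes can be raised far beyond what a physical hand could attempt in the same time, which is the speed-up the conversation refers to.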

What do we have here? Yeah, so we saw the grasping algorithm being trained. What we're actually seeing here are those grasps being realized. It's taking what it's learned and putting it into practice. So it's motion planning, not just the grasp anymore, but that in combination with the entire movement of the robot body.

Your eyes kind of lit up when you're talking about this. Why? Yeah. So when you just look at this from the outside, it's like, oh, a virtual robot is moving a chess piece, so what? But to me, this is like a spark-of-life moment, because what you're actually seeing is fully autonomous grasping and manipulation of an object in a scene where none of this is pre-programmed or specified. It's figuring out where those pieces are just by looking at the scene. Looking at them, you can see in this view, it's actually trying different potential grasps, and then it's actually executing the move using real physics.

Okay, you've got this virtual robot getting more sophisticated and doing these things. But like, in my head, I feel like the second you have the real robot, there's going to be all these differences in the chess piece and the physics between the hand and the pieces. There are still some discrepancies between reality and the simulation, but that gap is closing all the time. And to me, this could transfer and generalize to other types of objects, other scenarios.

So rather than just thinking about a chess game, think about cutlery being laid out in a restaurant or something like that. So what we're seeing now is we're seeing a little bit of a peek inside the AI mind when it's running. Okay? So if you remember earlier, we saw the VR type of piloting, imagine now, instead of having to control the robot with all this VR gear, you just have a drop down list of options. So you can see there are two screens here. This one we call the outer world model. So this is a digital twin of reality. This is a simulation of the world, and it's a simulated robot.

This one down here is the robot's mental model of the world. So this is inside its mind, and this is outside its mind. So you'll see the main difference between these two at the moment is pretty obvious: there is a chessboard with the pieces here, and it's not here. So the reason is that the robot is not looking at the chessboard, and it's never seen it before, so it doesn't have a mental model of the chessboard. But say I'm now a pilot here, I can use the command "look down" here.

We're now controlling the simulated robot. Look down. And now you can see the chessboard appeared here. So now it's building up this inner mental picture of what's going on in real time. Yeah, what's good? They're all, like, moving around, so they're jostling around a little bit. And the reason is that these are all being detected and figured out what they are in real time.

So, okay, so this is super interesting, what happened down here. A bunch of the pieces have disappeared. It's, like blocking its vision. Yeah. So, see, the hand is in front of the pieces. I think it's like a six month old baby. If you show it something and then you hide it, like peekaboo or something, it thinks it's gone. So that's a very human like trait of a developing mind to go through this stage where if you can't see it, it doesn't exist.
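The "if you can't see it, it doesn't exist" behavior can be illustrated with a small sketch of a world model that either forgets occluded objects or remembers their last known positions. This is a hypothetical illustration, not Sanctuary's implementation; the `update_model` function, the piece names, and the board coordinates are all made up.

```python
def update_model(model, visible, remember_occluded):
    """Update a {object_name: position} world model from current detections.

    With remember_occluded=False, anything not currently visible vanishes
    from the model (the peekaboo stage). With remember_occluded=True, the
    model keeps the last known position of objects it can no longer see.
    """
    if remember_occluded:
        updated = dict(model)    # start from what was known before
        updated.update(visible)  # refresh positions of visible objects
        return updated
    return dict(visible)         # unseen objects are forgotten


# The hand moves in front of the knight, so only the pawn is detected.
model = {"white_knight": (2, 3), "black_pawn": (4, 5)}
visible = {"black_pawn": (4, 5)}

forgetful = update_model(model, visible, remember_occluded=False)
persistent = update_model(model, visible, remember_occluded=True)
```

In the forgetful case the knight disappears from the model, as in the demo; the persistent case shows one simple way a model could keep it.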

So what we really want to get to here is that this robot, in any real world scenario, would roll into a room, it would look around, and it would start building a mental model of everything it was seeing in real time, including the room and all the objects and what they are and where they're located.

It's funny because in some ways, I'm picturing in my head, like, 1960s sci-fi, like an android that is moving around. And what you guys are making is still sort of, in some ways, people's first conception of where all this was heading. Well, I think so, yeah. And I like to think of Sanctuary's mission as going back to this sort of original vision of AI, when people like Alan Turing and Marvin Minsky and these people were thinking about, how do we actually make a machine or a system that thinks and behaves like a person?

If we want systems to be able to work in the same way people do, interact with us in the same way people do, they need a human-like body and a very human-like intelligence. And you think the dystopian stuff is all... you're optimistic by nature, or you think it's all overblown? If we manage to develop fully autonomous AI in a general purpose robot, you might ask, what will that look like? How will it change the world? What's going to be going on? But you saw it earlier, right? It'll look exactly like it did when Eric was piloting. It's just there won't be a person in a VR suit in there. So I would ask you, well, was that scary and terrifying and dystopian?

Right. And I also think if you're focusing on a very optimistic future where you have robots working alongside people, helping people, I think you will make that future come to pass. You get people accusing you of being naively optimistic, but I think you need that if you want to make that future real. And if you're really optimistic about it, it's more likely to happen.

I promise. I'm smiling behind the camera. Do robots say cheese? Yeah, absolutely. All right. One, two, three. Cheese. Cheese.

Technology, Innovation, Artificial Intelligence, Vancouver Robotics, Humanoid Robots, Future Of Work, Bloomberg Originals